For decades, AI progress has meant bigger models, faster GPUs, and larger datasets. But what if there were a fundamentally different, and possibly more efficient, way? A way that’s more flexible and fault-tolerant? A way that needs only a tiny fraction of the power to run? Neuromorphic computing, which aims to build processors that mimic the human brain, holds out that promise. In this article, we’ll explore the pros, cons, and use cases of this fascinating technology. And we’ll seek a level-headed answer to the question: is neuromorphic computing the future of AI?

What Exactly Is Neuromorphic Computing?

Put simply, neuromorphic computers try to mimic the human brain both in structure and in function. They are physical processors packed with artificial neurons and synapses that imitate how a real brain learns. But what exactly does that mean? And how is that different from neural networks run on our current hardware?

How does neuromorphic computing work?

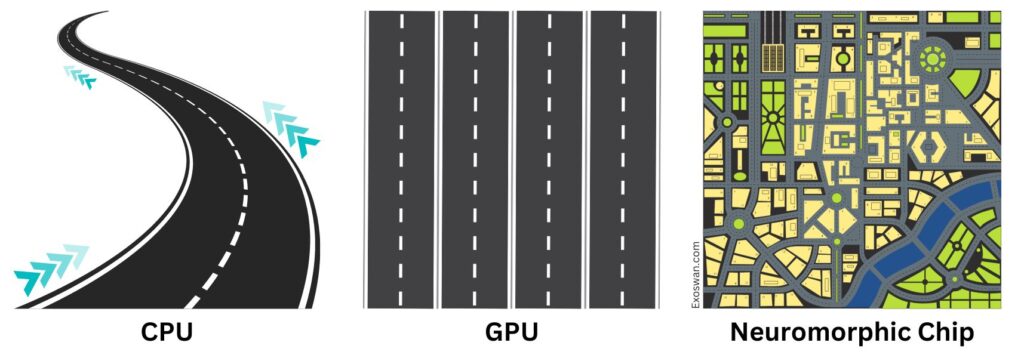

Think of traditional computing with CPUs as a single-lane superhighway. Information travels in neat packets (data bits), following specific routes (algorithms) to reach their destination (output). CPUs excel at this organized, sequential processing. But they struggle with large, messy datasets that require constant adaptation.

GPUs, which are the backbone of modern AI, are like an upgraded multi-lane highway. Each lane is a bit slower than the single-lane superhighway, but this one’s built for parallel processing. It handles vast amounts of data by dividing it into smaller streams, processing them simultaneously, and then reassembling the final result. This is much more efficient for specific tasks like deep learning, but still relies on predefined algorithms.

Continuing this analogy, a neuromorphic chip would be a bustling cityscape. Information flows through a network of interconnected streets (connections between artificial neurons). These “neurons” are not simple on/off switches like the transistors in traditional CPUs. They mimic biological neurons, sending electrical pulses (spikes) whose timing and frequency carry the message. Connections between neurons are also constantly updating, mimicking the brain’s learning process. In theory, these chips can handle complex data in real time, learn in unpredictable environments, and use much less energy.
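To make the idea of a spiking neuron more concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common simplified model in spiking-network research. The function name `simulate_lif` and all parameter values are illustrative assumptions (unitless, not taken from any real chip). The neuron’s potential leaks back toward rest while it integrates its input. When the potential crosses a threshold, the neuron emits an all-or-nothing spike and resets, so a stronger input shows up as more frequent spikes rather than bigger ones.

```python
from collections.abc import Sequence


def simulate_lif(
    input_current: Sequence[float],
    tau: float = 20.0,          # membrane time constant, in time steps (assumed value)
    v_rest: float = 0.0,        # resting potential
    v_threshold: float = 1.0,   # firing threshold
    v_reset: float = 0.0,       # potential right after a spike
    dt: float = 1.0,            # simulation step size
) -> list[int]:
    """Simulate one leaky integrate-and-fire neuron and return the steps at which it spikes."""
    v = v_rest
    spike_times: list[int] = []
    for t, i_in in enumerate(input_current):
        # Euler step of tau * dv/dt = -(v - v_rest) + i_in: leak toward rest, integrate input.
        v += dt * (-(v - v_rest) + i_in) / tau
        if v >= v_threshold:
            spike_times.append(t)  # every spike is identical; only its timing is recorded
            v = v_reset
    return spike_times


weak = simulate_lif([0.5] * 100)
medium = simulate_lif([1.5] * 100)
strong = simulate_lif([3.0] * 100)
print(len(weak), len(medium), len(strong))
```

With these assumed values the script prints `0 4 12`: an input of 0.5 never reaches threshold, while doubling the input from 1.5 to 3.0 triples the spike count over the same 100 steps.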

Neuromorphic computing vs neural networks

NB: While they share many of the same terms, neuromorphic computers should not be confused with the neural networks used in deep learning. Deep learning is a family of software algorithms that still mostly runs on traditional hardware, while neuromorphic computing is a fundamentally new approach to both hardware and software.

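Also, deep learning usually relies on backpropagation to learn, an error-correction method that propagates a global error signal backward through the network to adjust every weight. Neuromorphic systems, on the other hand, focus on biologically inspired learning rules like Spike-Timing-Dependent Plasticity (STDP), which adjust each connection using only local information about when the neurons on either side of it fired. This represents a completely different learning paradigm from the one that predominates in AI today.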
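A minimal sketch of one common form of STDP, the pair-based exponential rule, shows how local the learning signal is. The weight change depends only on the relative timing of a presynaptic and a postsynaptic spike, with no global error signal. The function names, amplitudes (`a_plus`, `a_minus`) and time constants are illustrative assumptions, and real neuromorphic chips implement their own variants of the rule.

```python
import math
from collections.abc import Iterable


def stdp_delta(t_pre: float, t_post: float,
               a_plus: float = 0.01, a_minus: float = 0.012,
               tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Weight change for one pre/post spike pair (pair-based exponential STDP)."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired just before post: likely causal, so strengthen
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:   # post fired before pre: not causal, so weaken
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def update_weight(w: float, pre_spikes: Iterable[float], post_spikes: Iterable[float],
                  w_max: float = 1.0) -> float:
    """Apply STDP over all pre/post spike pairs, then clip the weight to [0, w_max]."""
    post = list(post_spikes)
    for t_pre in pre_spikes:
        for t_post in post:
            w += stdp_delta(t_pre, t_post)
    return min(max(w, 0.0), w_max)


print(update_weight(0.5, pre_spikes=[10.0], post_spikes=[15.0]))  # above 0.5: strengthened
print(update_weight(0.5, pre_spikes=[15.0], post_spikes=[10.0]))  # below 0.5: weakened
```

The closer the two spikes are in time, the larger the change. Pairs far apart in time barely affect the weight.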

How does one actually make a neuromorphic chip?

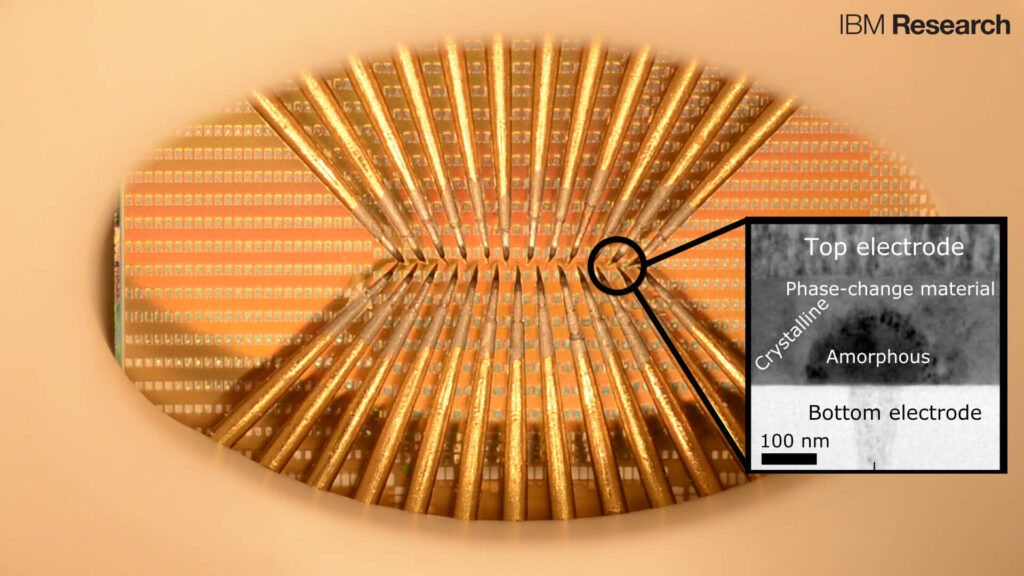

Early neuromorphic systems, and most of today’s chips, are built on conventional silicon, but much current research focuses on novel materials. Memristors, for example, could be the missing link. Memristors were first proposed in 1971 as a fourth fundamental circuit element alongside resistors, capacitors, and inductors. However, it wasn’t until 2008 that HP Labs demonstrated a working physical device.

A memristor’s key feature is that its electrical resistance isn’t fixed. It changes depending on how much current has flowed through it, and in which direction, so the device essentially “remembers” its past states. This mimics the way synaptic connections in our brains strengthen or weaken based on activity.

Memristors often have a sandwich-like structure: a thin layer of an active material (e.g. a metal oxide like titanium dioxide) placed between two metal electrodes. Under an applied voltage, oxygen vacancies (missing oxygen atoms) drift within the active material. This movement changes the resistance, providing the memory effect.
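This behavior can be sketched with a simplified version of the linear ion-drift model that HP Labs used to describe its titanium dioxide device. The device is treated as two resistors in series: a low-resistance region rich in oxygen vacancies and a high-resistance region without them. The boundary between the two moves in proportion to the charge that flows through. The class name, sign convention and all parameter values below are illustrative assumptions, and real devices are strongly nonlinear.

```python
class LinearDriftMemristor:
    """Toy memristor whose resistance depends on how much charge has flowed through it."""

    def __init__(self, r_on: float = 100.0, r_off: float = 16_000.0,
                 x: float = 0.1, k: float = 1e5) -> None:
        self.r_on = r_on    # resistance if the whole layer were vacancy-rich (x = 1)
        self.r_off = r_off  # resistance if the whole layer were vacancy-free (x = 0)
        self.x = x          # fraction of the layer that is vacancy-rich, between 0 and 1
        self.k = k          # drift coefficient: change in x per coulomb (assumed value)

    @property
    def resistance(self) -> float:
        # Two regions in series, weighted by where the boundary currently sits.
        return self.r_on * self.x + self.r_off * (1.0 - self.x)

    def apply_voltage(self, volts: float, duration: float, dt: float = 1e-4) -> float:
        """Hold a voltage for `duration` seconds and return the final resistance."""
        for _ in range(round(duration / dt)):
            current = volts / self.resistance        # Ohm's law
            # Sign convention assumed here: positive current widens the vacancy-rich region.
            self.x += self.k * current * dt          # boundary moves with charge (current * dt)
            self.x = min(max(self.x, 0.0), 1.0)      # boundary cannot leave the device
        return self.resistance


m = LinearDriftMemristor()
r_start = m.resistance
r_after_positive = m.apply_voltage(1.0, 0.01)   # resistance drops
r_after_rest = m.apply_voltage(0.0, 0.01)       # no current, so the state is "remembered"
r_after_negative = m.apply_voltage(-1.0, 0.01)  # resistance rises again
print(r_start, r_after_positive, r_after_rest, r_after_negative)
```

With zero applied voltage no current flows and the resistance stays exactly where the last pulse left it, which is the memory effect described above.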

Memristors are key to neuromorphic computing for several reasons. They can be miniaturized to extremely small sizes, allowing for dense neural networks on a single chip. They can also store and process data at the same location (within the memristor itself). This offers a huge potential speed-up over traditional computers, where data shuttles between memory and processor. Last but not least, memristors are non-volatile (they retain their state without power) and can switch using very little energy, making them ideal for low-power edge devices.
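Storing and processing data in the same place is usually realized with a crossbar array. Each memristor’s conductance stores one weight, and voltages applied to the rows encode the input. By Ohm’s law and Kirchhoff’s current law, the current collected on each column is then a weighted sum of the inputs, so the array performs a matrix-vector multiplication in one analog step without moving the weights. The sketch below computes what an ideal crossbar would output. The function name and values are illustrative, and it ignores wire resistance, sneak-path currents, device noise, and the analog-to-digital conversion that real arrays must handle.

```python
from collections.abc import Sequence


def crossbar_mvm(conductances: Sequence[Sequence[float]],
                 voltages: Sequence[float]) -> list[float]:
    """Column currents of an ideal memristor crossbar.

    conductances[i][j] is the conductance of the device joining row i to column j
    (the stored weight); voltages[i] is the input voltage applied to row i.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need one input voltage per row")
    currents = [0.0] * len(conductances[0])
    for row, v in zip(conductances, voltages):
        for j, g in enumerate(row):
            # Ohm's law gives each device's current (I = G * V); Kirchhoff's current law
            # sums all device currents flowing into the same column wire.
            currents[j] += g * v
    return currents


# Toy, unitless values: 2 inputs (rows) x 2 outputs (columns).
print(crossbar_mvm([[1.0, 2.0], [3.0, 4.0]], [1.0, 0.5]))
```

This prints `[2.5, 4.0]`: each column current is the dot product of the input voltages with that column’s conductances.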

Advantages of Neuromorphic Computing

We’ve already mentioned some of the advantages, but let’s get to the heart of the matter. Why is neuromorphic computing potentially better than current AI approaches? When and where would we want to use a neuromorphic chip, and why?

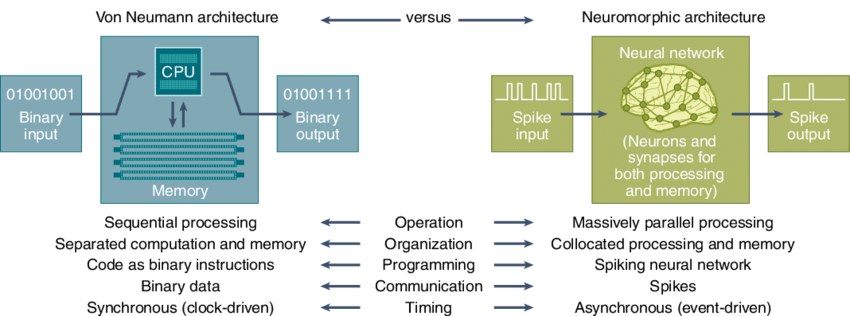

The von Neumann Bottleneck

Traditional computers are based on the von Neumann architecture. They have separate processing units (CPUs or GPUs) and memory units. Data needs to be constantly shuttled back and forth between them, creating a bottleneck that limits performance and efficiency. Think of it like a busy chef constantly running back and forth to the pantry to retrieve ingredients while trying to cook a complex meal.

Unlike von Neumann systems, neuromorphic chips try to integrate processing and memory within the same architecture. This greatly reduces the data transfer bottleneck and allows for more efficient information flow. It is also closer to how our brains naturally work: we don’t have separate memory and processing centers. Instead, information flows seamlessly within a network of neurons.

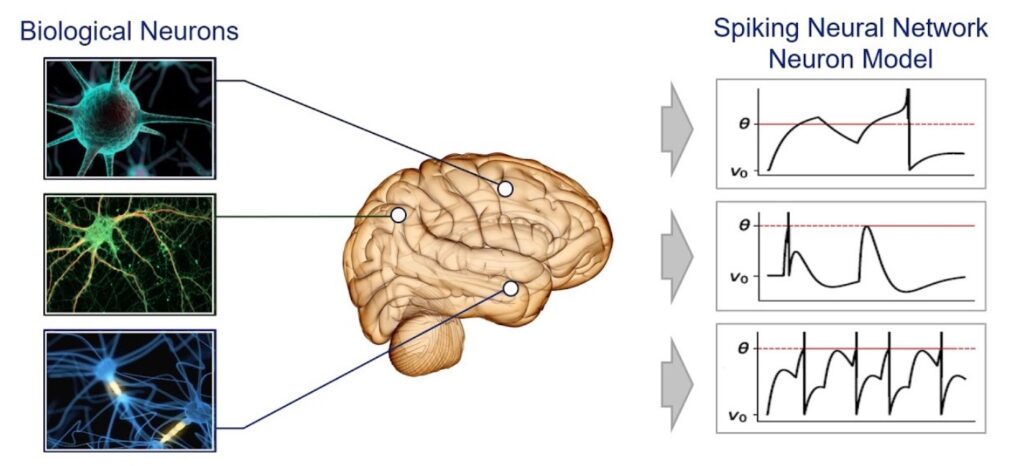

Spiking Neural Networks: Beyond 0s and 1s

Traditional computers and most AI models rely on a binary communication system: 0s and 1s. In contrast, neuromorphic chips typically run spiking neural networks (SNNs), whose neurons communicate with electrical pulses called “spikes.” Think of the neurons in our brains: they don’t just send on/off signals. They fire electrical pulses whose timing and frequency carry information. SNNs mimic this approach.

Because a neuron transmits only when it spikes, most of the network sits idle at any given moment, so spiking communication can require much less energy than constantly transmitting binary data. In addition, SNNs can encode information not just in the presence of a spike, but also in its timing relative to other spikes. This potentially allows them to process temporal patterns more efficiently than traditional AI. By incorporating spiking mechanisms, SNNs come closer to how biological brains actually work.
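Two common ways of turning a value into spikes illustrate the difference between counting spikes and timing them. In rate coding, a stronger input produces more spikes in a time window. In latency (time-to-first-spike) coding, a stronger input produces a single earlier spike, so the same value can be carried by one event instead of many. The function names, the 10-step window, and the assumption that inputs are normalized to [0, 1] are illustrative choices for this sketch.

```python
def rate_code(intensity: float, n_steps: int = 10) -> list[int]:
    """Rate coding: the number of spikes in the window grows with the input."""
    spikes, accumulator = [], 0.0
    for _ in range(n_steps):
        accumulator += intensity  # deterministic stand-in for a random (Poisson) spike generator
        if accumulator >= 1.0:
            spikes.append(1)
            accumulator -= 1.0
        else:
            spikes.append(0)
    return spikes


def latency_code(intensity: float, n_steps: int = 10) -> list[int]:
    """Latency coding: one spike, and the stronger the input, the earlier it fires."""
    intensity = min(max(intensity, 0.0), 1.0)  # assume inputs normalized to [0, 1]
    spikes = [0] * n_steps
    if intensity > 0.0:
        spikes[round((1.0 - intensity) * (n_steps - 1))] = 1
    return spikes


print(rate_code(0.5))      # five spikes spread over the window
print(latency_code(0.9))   # a single early spike
print(latency_code(0.2))   # a single late spike
```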

Key Advantages of Neuromorphic Systems

“Non-von Neumann” architectures, combined with SNNs, have several key theoretical advantages:

- Extreme Energy Efficiency: In-memory processing and event-driven spiking can dramatically reduce power consumption compared to von Neumann architectures.

- Faster Real-Time Processing: Eliminating data transfer bottlenecks and leveraging efficient spiking communication enable faster processing for real-time tasks.

- Potential for New Learning Paradigms: Biologically inspired learning mechanisms within SNNs offer the potential for more flexible and efficient learning compared to traditional AI models.
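A rough way to see where the energy savings could come from is to count synaptic operations. A conventional, clock-driven dense layer touches every weight at every time step, whereas an event-driven spiking layer does work only when an input actually spikes. Operation counts are only a proxy, since real energy also depends on the hardware, memory traffic and numerical precision. The function names and the layer size and spike activity in the example are hypothetical.

```python
from collections.abc import Sequence


def dense_ops(n_inputs: int, n_outputs: int, n_steps: int) -> int:
    """Multiply-accumulates for a clocked dense layer evaluated at every time step."""
    return n_inputs * n_outputs * n_steps


def event_driven_ops(spike_trains: Sequence[Sequence[int]], n_outputs: int) -> int:
    """Accumulates for an event-driven layer: each input spike updates every output once."""
    total_spikes = sum(sum(train) for train in spike_trains)
    return total_spikes * n_outputs


# Hypothetical workload: 100 inputs, 10 outputs, 50 time steps, each input spiking twice.
n_inputs, n_outputs, n_steps = 100, 10, 50
train = [0] * n_steps
train[3] = train[20] = 1
trains = [train] * n_inputs  # every input shares the same (read-only) spike pattern

print(dense_ops(n_inputs, n_outputs, n_steps))  # 50000 multiply-accumulates
print(event_driven_ops(trains, n_outputs))       # 2000 accumulates
```

Under these assumptions the event-driven layer does 25 times fewer operations, and with binary spikes each one is an addition rather than a multiplication. The advantage shrinks as spiking activity becomes denser.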

That said, it’s unlikely that neuromorphic computers will ever fully replace traditional computers. Just as with quantum computers, neuromorphic chips will be especially useful for certain problems and less so for others. Generally, the benefits of neuromorphic hardware grow with problem complexity and the number of sensors involved.

Applications of Neuromorphic Computing

Here are a few examples of where neuromorphic chips can shine:

Computer Vision in Robotics

Current robots often have a dedicated CPU/GPU for image processing and a separate unit for decision-making. A neuromorphic chip could combine low-power real-time vision with on-chip learning, enabling (1) adaptive navigation in unpredictable environments like construction sites or during disaster relief and (2) object recognition without massive training datasets, ideal for flexible manufacturing tasks.

Sensor Fusion for Wearable Health Devices

Wearables generate many noisy data streams (heart rate, motion, skin temperature). Small neuromorphic chips could (1) learn an individual’s baseline patterns, enabling highly personalized health monitoring and (2) detect subtle changes invisible to rule-based algorithms, potentially spotting illnesses earlier.

Adaptive Control for Prosthetics

Neuromorphic systems could analyze muscle signals or even tap into neural activity, allowing for (1) finer, more intuitive control of prosthetic limbs without extensive re-calibration and (2) prosthetics that learn and adjust to a user’s movement patterns over time.

Low-Energy, AI-Powered Edge Computing

Neuromorphic chips could also shine in edge devices that need to make complex decisions, such as autonomous vehicles. They’d be able to provide the “smarts” while (1) not requiring cloud connectivity, making them reliable in remote environments or disaster zones, and (2) using a fraction of the power of today’s chips, extending battery life substantially.

These examples aren’t science fiction. They use existing sensors and algorithms, which would simply be more efficient or responsive on a neuromorphic chip. And as the technology matures, its efficiency gains could make neuromorphic chips attractive for an ever broader range of AI applications.

Disadvantages of Neuromorphic Computing

Neuromorphic computing is a young field, and there are still many hurdles to overcome, from engineering to measurement.

Technical, Engineering, and Scaling Challenges

It’s no easy feat translating neuroscience to engineering. Our understanding of the human brain is still evolving, and we still can’t perfectly replicate its functions. Traditional silicon transistors cannot efficiently mimic the complex behavior of biological neurons, so researchers are still exploring alternatives like memristors.

Even manufacturing these chips at scale is still incredibly tough. Managing power delivery and heat dissipation at larger scales is a complex engineering problem. Today’s largest neuromorphic chips (like Intel’s Loihi 2) have around 1 million neurons. This is a far cry from the estimated 86 billion neurons in the human brain.
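A back-of-the-envelope calculation puts that gap in perspective. If we count neurons alone and take the round figure of about one million neurons per chip, matching the brain would take on the order of:

$$
\frac{86 \times 10^{9}\ \text{neurons}}{1 \times 10^{6}\ \text{neurons per chip}} = 86{,}000\ \text{chips}
$$

This ignores synapses, which are far more numerous than neurons. It also ignores the interconnect and power delivery needed to link that many chips, so it understates the challenge.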

Programming Paradigm Shift: Synchronous to Asynchronous

Even if we were to build full-scale neuromorphic hardware, it wouldn’t be plug-and-play. Most traditional algorithms are designed for synchronous systems (CPUs/GPUs), where instructions are executed sequentially in a clock-driven manner. However, neuromorphic systems are inherently asynchronous. Neurons fire only when stimulated by input signals or based on internal dynamics.

This requires algorithms that can handle event-driven, spiking data. For example, a traditional deep learning model processes an entire image frame through a precise sequence of computations that we define from the outset. Even a GPU follows that sequence; it just runs many threads in parallel. But a neuromorphic algorithm would process the image asynchronously: different neurons would fire based on the patterns they detect, independently and at their own pace.
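The event-driven style can be sketched in software with a priority queue of spike events ordered by time. Nothing happens on a clock tick. Work is done only when a spike arrives, and a neuron that crosses its threshold schedules a new spike for its targets. This is a sequential simulation of asynchronous behavior, not a description of how any chip schedules work internally. The function name, the fixed one-unit synaptic delay, and the simple non-leaky neurons are illustrative assumptions.

```python
import heapq
from collections.abc import Iterable


def run_event_driven(
    synapses: dict[str, list[tuple[str, float]]],
    initial_spikes: Iterable[tuple[float, str]],
    threshold: float = 1.0,
    delay: float = 1.0,
    t_max: float = 100.0,
) -> list[tuple[float, str]]:
    """Process spike events in time order and return (time, neuron) for every spike."""
    potentials: dict[str, float] = {}
    queue = list(initial_spikes)
    heapq.heapify(queue)  # earliest event first
    fired: list[tuple[float, str]] = []
    while queue:
        t, neuron = heapq.heappop(queue)
        if t > t_max:
            break
        fired.append((t, neuron))
        # Only the targets of this spike do any work.
        for target, weight in synapses.get(neuron, []):
            potentials[target] = potentials.get(target, 0.0) + weight
            if potentials[target] >= threshold:
                potentials[target] = 0.0  # reset after firing
                heapq.heappush(queue, (t + delay, target))
    return fired


network = {"a": [("c", 0.6)], "b": [("c", 0.6)], "c": [("d", 1.0)]}
print(run_event_driven(network, [(0.0, "a"), (0.5, "b")]))
```

Neuron `c` fires only once inputs from both `a` and `b` have arrived, and `d` fires one delay later, giving `[(0.0, 'a'), (0.5, 'b'), (1.5, 'c'), (2.5, 'd')]`.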

Lack of Apples-to-Apples Performance Benchmarks

Traditional computing has established benchmarks like FLOPS (floating-point operations per second) or frames per second in graphics. But the neuromorphic world still lacks standardized ways to measure and compare performance. For example, neuromorphic chips are not just about raw speed: energy savings are another important metric and a major reason for using them.
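Because speed and energy pull in different directions, fair comparisons usually need several numbers at once. The sketch below derives throughput, energy per inference, and the energy-delay product (EDP) from a single measured run. EDP is a long-standing computer-architecture metric that multiplies energy by latency. The class name is hypothetical, and latency is approximated as total time divided by the number of inferences, which assumes inferences run one after another. The two example runs use made-up numbers, not measurements of any real chip.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunMeasurement:
    inferences: int  # number of inputs processed
    seconds: float   # wall-clock time for the whole run
    joules: float    # energy consumed during the run

    @property
    def throughput(self) -> float:
        """Inferences per second."""
        return self.inferences / self.seconds

    @property
    def energy_per_inference(self) -> float:
        """Joules per inference."""
        return self.joules / self.inferences

    @property
    def energy_delay_product(self) -> float:
        """Energy x latency per inference (joule-seconds); lower is better."""
        latency = self.seconds / self.inferences  # assumes inferences run one at a time
        return self.energy_per_inference * latency


# Made-up numbers: a fast but power-hungry accelerator vs. a slower, frugal chip.
fast = RunMeasurement(inferences=1000, seconds=1.0, joules=50.0)
frugal = RunMeasurement(inferences=1000, seconds=4.0, joules=2.0)
for name, run in [("fast", fast), ("frugal", frugal)]:
    print(name, run.throughput, run.energy_per_inference, run.energy_delay_product)
```

With these invented figures the fast chip wins on throughput, while the frugal one wins on both energy per inference and EDP. A single “speed” number would hide that trade-off.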

This lack of standardized benchmarks creates something of a “chicken and egg” problem. Without clear metrics, it’s difficult to assess progress. But without demonstrably superior performance, it’s harder to secure R&D funding and, in turn, adoption.

So Is Neuromorphic Computing the Future of AI?

Neuromorphic computing offers tantalizing promises – extreme energy efficiency, adaptive learning, and tackling real-world sensory data in ways that challenge traditional AI. However, it’s crucial to remember that we’re still in the very early stages of this technology. Manufacturing, programming, and benchmarking challenges all stand in the way of widespread adoption.

One bridge between the present and the future might be the development of hybrid systems. These combine traditional chips with neuromorphic ones, allowing each to play to its strengths. Neuromorphic cores could handle specific tasks requiring low-power, real-time processing, like smart city sensors or self-driving cars. Meanwhile, traditional CPUs or GPUs would still manage overall system control. As neuromorphic technology matures, the balance within these hybrid systems could shift.

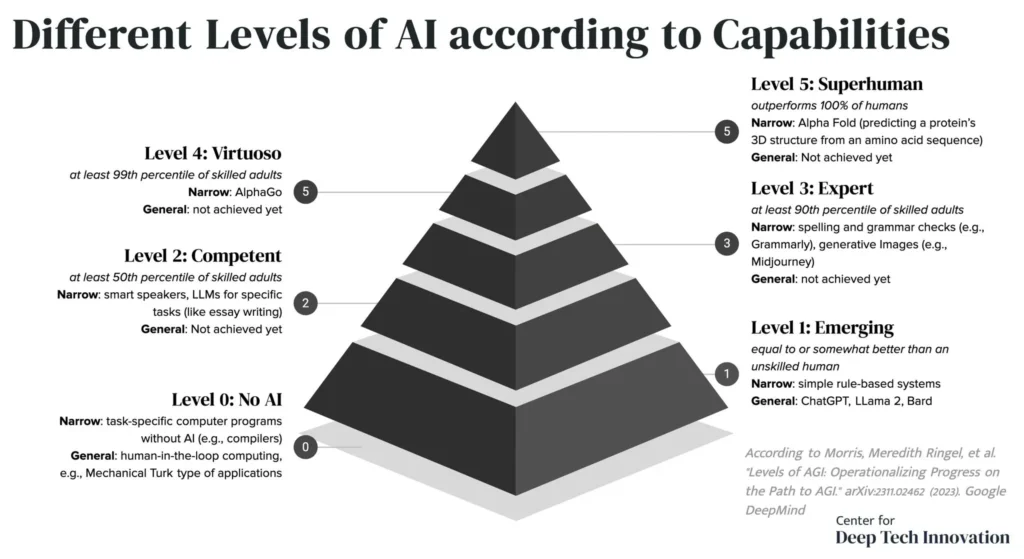

The ultimate question is whether neuromorphic computing can play a role in the pursuit of Artificial General Intelligence (AGI). Some researchers believe AGI is achievable with current paradigms, and it’s just a matter of scaling up existing models. They point to recent advances in generative AI that have forced us to rethink what we assumed about human creativity.

Still, other AI researchers don’t think current methods are enough. For example, some theories suggest true sentience is inseparable from the body and the environment. Current AI operates on abstract data models, while our brains evolved in the context of physical action and sensory perception. This argues for hardware specialized in handling noisy, real-world sensory data in real time: in other words, neuromorphic computing.

Regardless, neuromorphic AI will likely complement, rather than replace, the AI approaches we have today. This is an extremely exciting field to watch, and plenty of startups are already pushing its boundaries. Whether neuromorphic computing ends up being a key puzzle piece for AGI or “merely” finds use in niche cases like edge AI, it’s well worth keeping a close eye on.