AI infrastructure is the physical and foundational layer that turns electricity and silicon into intelligent software. It spans the specialized data centers built for accelerated computing; the GPU- and flash‑heavy servers that train and run models; the high‑speed networking and optics that knit thousands of chips into one computer; and the power and cooling systems that keep all of it running. If AI applications are the storefront, AI infrastructure is the factory—racks, pipes, and logistics—quietly determining what’s possible, how fast it happens, and at what cost.

In this report, we highlight the top AI infrastructure stocks to watch: data center picks-and-shovels winners in servers, storage, networking, cooling, and AI+HPC cloud.

Why AI infrastructure, why now?

The first tailwind is a capital‑spending super‑cycle. The largest buyers of compute are pouring unprecedented sums into AI capacity, and that spend flows directly into the picks‑and‑shovels vendors across servers, networks, and facilities. In July 2025, Microsoft told investors it expects more than $30 billion of capital expenditures in the coming quarter to meet cloud and AI demand—an all‑time high pace for the company. Alphabet lifted its 2025 capex target to about $85 billion, up from $75 billion earlier in the year, explicitly to expand AI and cloud infrastructure. Meta, for its part, guided to $66–$72 billion of 2025 capex, roughly $30 billion higher year over year at the midpoint, to fund AI data centers and compute. When the biggest buyers accelerate like this, their supply chains—server makers, optical module producers, power and cooling providers—stay busy.
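As a rough check on the scale of these figures, the arithmetic behind them can be sketched in a few lines. The inputs are only the numbers quoted above; the implied prior-year Meta figure and the percentage changes are derived here for illustration and are not company-reported values.

```python
# Capex figures quoted in the paragraph above, in billions of US dollars.
meta_2025_low, meta_2025_high = 66.0, 72.0
meta_increase_at_midpoint = 30.0  # "roughly $30 billion higher year over year"
alphabet_2025_new, alphabet_2025_old = 85.0, 75.0

# Midpoint of Meta's guided range.
meta_midpoint = (meta_2025_low + meta_2025_high) / 2

# Derived, not stated in the text: prior-year capex implied by the midpoint.
meta_implied_prior_year = meta_midpoint - meta_increase_at_midpoint
meta_implied_growth = meta_increase_at_midpoint / meta_implied_prior_year

# Size of Alphabet's mid-year raise relative to its earlier target.
alphabet_raise = (alphabet_2025_new - alphabet_2025_old) / alphabet_2025_old

print(f"Meta 2025 midpoint: ${meta_midpoint:.0f}B")
print(f"Meta implied prior-year capex: ${meta_implied_prior_year:.0f}B")
print(f"Meta implied year-over-year growth: {meta_implied_growth:.0%}")
print(f"Alphabet target raise: {alphabet_raise:.1%}")
```

On these rounded inputs, Meta's midpoint implies capex growth of roughly three quarters in a single year, while Alphabet's raise alone adds a bit over a tenth to its earlier plan.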

The second tailwind is physics. Training and serving modern models concentrates power and heat at levels traditional data centers weren’t built for. The International Energy Agency projects global data center electricity consumption could roughly double by 2030 to around 945 TWh, with AI a primary driver. That pressure is forcing rapid upgrades: direct liquid cooling is moving from niche to necessary, with one industry survey showing 22% of operators already using it and 61% considering it. At the same time, the data pipes inside AI clusters are jumping a speed class, with industry forecasts expecting most AI back‑end switch ports to be 1600 Gbps by 2027—an optical and networking windfall.
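To put "roughly double by 2030" in annual terms, a minimal sketch of the implied compound growth rate follows. The base year of 2024 is an assumption made for this illustration, and the starting level is simply backed out of the rounded "double" figure rather than taken from the IEA.

```python
def implied_annual_growth(multiple: float, years: int) -> float:
    """Compound annual growth rate that turns 1x into `multiple` over `years`."""
    return multiple ** (1 / years) - 1


TARGET_TWH = 945.0   # 2030 projection quoted above
MULTIPLE = 2.0       # "roughly double"
BASE_YEAR = 2024     # assumption for illustration, not stated in the text
YEARS = 2030 - BASE_YEAR

growth = implied_annual_growth(MULTIPLE, YEARS)
implied_base_twh = TARGET_TWH / MULTIPLE  # starting level implied by an exact doubling

print(f"Implied starting consumption: about {implied_base_twh:.0f} TWh")
print(f"Implied compound annual growth: {growth:.1%}")
```

Under those assumptions, doubling over six years works out to a low double-digit percentage increase in electricity use every year, which is the pace power and cooling vendors have to keep up with.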

Put together, AI infrastructure isn’t a single bet; it’s a system of bottlenecks being removed in sequence. As models scale and adoption grows, dollars flow toward whatever keeps GPUs fed, cool, and connected.

Specialized AI Data Centers

Specialized AI data centers are factories for intelligence, designed around GPUs, high-density racks, and advanced cooling. They rent compute as a product, turning power and real estate into training throughput. Their edge is speed: cheap power and custom buildouts that beat hyperscalers’ queues. Among AI infrastructure stocks, these operators monetize the scarcity of compute, with long-term contracts that lock in demand as the arms race continues.

CoreWeave (NASDAQ: CRWV)

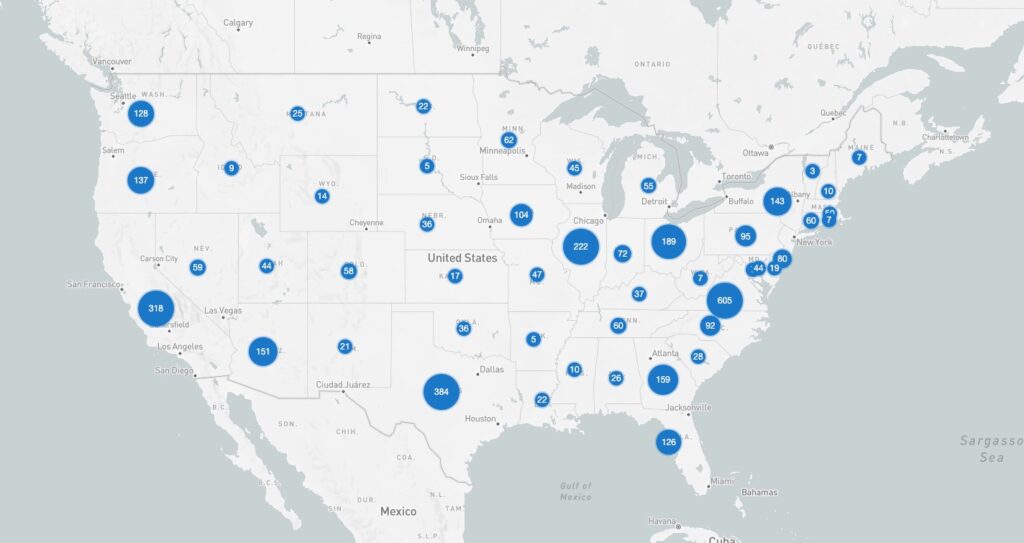

HQ: USA; Specialized GPU cloud and AI data center operator.

CoreWeave began as a cryptocurrency miner that pivoted to become a pure-play AI cloud provider. By repurposing its trove of GPUs from mining, it transformed into a go-to platform for renting advanced computing power. Today, CoreWeave runs specialized data centers packed with cutting-edge Nvidia chips, offering on-demand capacity for training and deploying AI models at scale. Its strategy is to outmaneuver the tech giants not on breadth of services, but through laser focus on high-performance GPU infrastructure, including custom networking and cooling tuned for machine learning loads. This focus has made CoreWeave a critical behind-the-scenes player: it inked a five-year, $12 billion contract to provide cloud compute for OpenAI, and built an AI supercomputer that Nvidia touted as the world’s fastest.

For investors, CoreWeave represents the rise of boutique “AI hyperscalers” that fill the gap left by traditional clouds’ capacity constraints. As demand for ChatGPT-like services exploded, even Google turned to CoreWeave’s ready-made GPU clusters to quickly scale up AI work. CoreWeave’s early bet on GPUs and deep specialization have made it a cornerstone of the AI infrastructure boom, differentiating it as an agile, purpose-built alternative to the usual hyperscalers.

Applied Digital (NASDAQ: APLD)

HQ: USA; Builder-landlord of high-density AI data centers.

Applied Digital has reinvented itself from a crypto mining host into a builder of “AI factories,” next-gen data centers designed specifically for power-hungry computing. After pivoting in 2022 to focus on high-density infrastructure (even rebranding from “Applied Blockchain” to signal the shift), the company now delivers bespoke facilities with features like liquid cooling and strategic locations for cheap power. Instead of operating a cloud service directly, Applied Digital leases these turnkey AI data centers to cloud providers and tech firms that need to scale up quickly. This model was validated by a landmark deal: two 15-year leases with CoreWeave, expected to generate about $7 billion in revenue.

In essence, Applied Digital’s value lies in doing the heavy lifting of building and powering AI-ready data centers so its clients don’t have to. By rapidly deploying facilities in cooler climates (like North Dakota) alongside advanced cooling tech, it helps alleviate the physics bottlenecks in AI compute. By acting as the landlord and engineer for the AI boom, Applied Digital is positioned as a unique picks-and-shovels play in AI infrastructure. Its long-term, volume contracts provide stability while the overall demand for AI hosting continues to surge.

IREN Ltd (NASDAQ: IREN)

HQ: Australia; Renewable-powered data centers pivoting to GPU hosting.

IREN (formerly Iris Energy) is an Australian-founded firm that started as a Bitcoin miner and is now repurposing its clean-energy data centers for the AI era. The company operates facilities powered entirely by renewable energy (hydro and wind), turning cheap electricity into digital compute capacity. In 2024, as crypto mining economics tightened, IREN began deploying Nvidia GPUs to rent out high-performance computing. By mid-2025, it had about 4,300 GPUs online for AI workloads alongside its ongoing Bitcoin operations. It has already signed initial AI cloud contracts and is building out new liquid-cooled data center sections in Texas and British Columbia capable of housing up to 20,000 GPUs for AI and HPC clients.

IREN’s story fits into the broader trend of energy-intensive crypto miners pivoting to serve the surging demand for AI computation. The company’s calling card is its abundant, low-cost power and ready infrastructure; it has over 2.9 gigawatts of power capacity secured across its sites for future growth. This enables IREN to offer AI infrastructure that’s not only scalable but also sustainably powered, appealing to organizations mindful of carbon footprints. While still in early stages of its AI transition, IREN provides a unique mix of green energy and high-performance computing.

Northern Data (ETR: NB2)

HQ: Germany; European GPU supercomputing with liquid-cooled data centers.

Northern Data has morphed from a cryptocurrency miner into one of Europe’s HPC powerhouses. Through its Taiga Cloud division, it owns one of Europe’s largest GPU supercomputer clusters, a fleet of cutting-edge Nvidia processors that it invested heavily to secure (over €700 million spent on H100 GPUs). Northern Data pairs this compute muscle with its Ardent Data Centers arm, which operates advanced facilities featuring liquid cooling and high-density power design. It even recently opened a 20 MW AI data center in Pittsburgh with direct-to-chip cooling and a target PUE ~1.15, supporting extremely high rack densities. Across Europe and North America, the company is developing sites with a potential 850 MW of capacity, positioning itself as a go-to provider for AI infrastructure.
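For readers unfamiliar with the metric, PUE (power usage effectiveness) is total facility power divided by the power delivered to IT equipment, so a value of 1.0 would mean zero overhead. The sketch below shows what a 1.15 target implies. Whether the 20 MW figure refers to IT load or to total facility power is not stated above, so both readings are shown as assumptions.

```python
def total_facility_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power, given IT load and PUE (PUE = total / IT)."""
    return it_load_mw * pue


def it_load_mw(total_mw: float, pue: float) -> float:
    """IT load supported, given total facility power and PUE."""
    return total_mw / pue


PUE_TARGET = 1.15   # target quoted above
SITE_MW = 20.0      # capacity quoted above; its exact meaning is an assumption

# Reading 1 (assumption): 20 MW is the IT load.
total_if_it = total_facility_mw(SITE_MW, PUE_TARGET)
overhead_if_it = total_if_it - SITE_MW

# Reading 2 (assumption): 20 MW is the total facility power.
it_if_total = it_load_mw(SITE_MW, PUE_TARGET)
overhead_if_total = SITE_MW - it_if_total

print(f"If 20 MW is IT load: total {total_if_it:.1f} MW, overhead {overhead_if_it:.1f} MW")
print(f"If 20 MW is total: IT load {it_if_total:.1f} MW, overhead {overhead_if_total:.1f} MW")
```

Either way, a PUE near 1.15 means only about 13% of the power entering the building goes to cooling, power conversion, and other overhead rather than to the chips themselves.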

Northern Data pursues a full-stack approach. It not only builds and operates data centers but also provides on-demand access to GPU clusters for AI developers. Its crypto-mining roots gave it the capital and expertise to pivot early into AI; Nvidia even confirmed that Northern Data runs Europe’s largest AI hardware cluster. Northern Data is therefore also positioned to benefit as Europe seeks sovereign AI infrastructure, giving U.S. investors international exposure to the AI build-out.

AI-Optimized Servers & Storage

AI-optimized servers and storage are the muscle and metabolism of modern AI clusters. GPU-dense racks do the math; flash arrays keep them fed so nothing starves waiting for data. These companies ship integrated systems—compute, networking, and cooling—that cut deployment from months to weeks. For AI infrastructure stocks, this segment captures direct spend that turns capital into training throughput, compounding with every new model and workload.

Super Micro Computer (NASDAQ: SMCI)

HQ: USA; Fast-cycle, rack-scale GPU servers.

Supermicro is a Silicon Valley hardware maker that has emerged as an unsung winner of the AI boom. Historically known for motherboards and server components, it reinvented itself by delivering whole racks of AI-optimized servers faster and more flexibly than larger rivals. When cloud giants and research labs needed to deploy hundreds of Nvidia GPU systems, Supermicro’s agile engineering and manufacturing allowed it to meet those orders in weeks instead of months. It builds fully integrated “AI racks” – complete with the latest GPUs, high-speed networking, and even liquid cooling – that customers can simply plug into their data centers to scale up machine learning capacity quickly.

This rack-level focus is Supermicro’s key differentiator. Rather than selling just individual servers, the company pre-configures entire AI infrastructure blocks, even installing software and networking, to save clients time. That extra level of service has created a virtuous cycle of repeat business. Supermicro’s close relationships with chip makers (it’s reportedly even assembling Nvidia’s own AI supercomputers behind the scenes) have helped it stay on the cutting edge of AI hardware availability. As AI adoption continues, Supermicro’s ability to quickly deliver high-performance, custom solutions has allowed it to outpace incumbent server vendors in this niche.

One Stop Systems (NASDAQ: OSS)

HQ: USA; Rugged GPU compute and storage for AI at the edge.

One Stop Systems builds high-performance computers that can go where traditional data centers cannot. Its specialty is “AI on the edge” – designing ruggedized servers and storage for military vehicles, aircraft, and remote industrial sites. These are places where AI algorithms increasingly need to run (for example, analyzing sensor feeds on a drone or tank in real time), but the hardware must fit into limited space while withstanding harsh conditions: shock, vibration, and temperature extremes. OSS addresses this by engineering compact systems that pack the latest GPUs and flash storage into durable, mobile units. In essence, it brings data center-class AI computing power out to the tactical edge.

In contrast to mainstream server makers, OSS has a singular focus on extreme-edge use cases. The company has secured multiple defense contracts, including a recent $6.5 million deal to supply AI-driven tactical servers for a U.S. Department of Defense program. It also enjoys a partnership with Nvidia as a tier-2 OEM, giving it early access to top-tier chips for these specialized designs. As AI applications expand beyond cloud data centers into battlefield intelligence, autonomous vehicles, and other remote settings, OSS is positioned as a key enabler.

Pure Storage (NYSE: PSTG)

HQ: USA; All-flash storage that keeps AI clusters fed.

Pure Storage provides the high-speed data foundation that keeps AI systems fed with information. In AI model training, powerful GPU servers can crunch data at astonishing rates, but only if the storage can supply data just as fast. Pure’s all-flash storage arrays are built for this era of massive data throughput. Over 90% of cloud data is still stored on slower hard drives today, yet modern AI workloads demand low-latency, high-bandwidth storage – a secular tailwind for Pure’s flash solutions. Its flagship FlashBlade systems, for example, can deliver tens of gigabytes per second of read throughput, preventing expensive GPUs from sitting idle waiting for data. In effect, Pure’s technology removes bottlenecks in the AI data pipeline.

Pure Storage has partnered with Nvidia to create AI-Ready Infrastructure (AIRI), combining Pure’s FlashBlade storage with Nvidia’s DGX AI servers as an integrated solution for enterprises. An A-list of tech giants – including Meta, Alphabet (Google), and Salesforce – rely on Pure’s arrays to store and serve their AI training data. Like many other AI infrastructure stocks, Pure is a picks-and-shovels play: as organizations ramp up AI capabilities, many are upgrading from legacy storage to Pure’s flash systems to avoid hindering their shiny new AI clusters.

High-Speed Networking & Interconnect

High-speed networking and interconnect is the nervous system of AI: it links thousands of GPUs so they act like one computer. The jump from 100G to 400G and 800G, plus optical modules and low-latency fabrics, determines how fast models train and serve. Within AI infrastructure stocks, these companies sell the bandwidth that unlocks cluster efficiency—small drops in latency can yield outsized gains in compute.

Arista Networks (NYSE: ANET)

HQ: USA; Cloud-class Ethernet switching for AI superclusters.

Arista Networks supplies the ultra-fast switches and networking gear that tie together modern AI supercomputers. Training advanced AI models often involves thousands of GPUs working in parallel, which demands enormous data throughput within and between server racks. Arista has become a key beneficiary of this trend: companies building AI infrastructure have been rapidly upgrading their data center networks as well, lifting demand for Arista’s 400 Gbps and 800 Gbps Ethernet switches. The firm’s cloud networking pedigree – it’s a leading supplier of high-performance switches to hyperscalers like Microsoft and Meta – positioned it perfectly for the AI era’s explosion of “east-west” data center traffic.

Arista’s network operating system (EOS) and switch designs are known for high throughput and low latency, which customers leverage to knit together AI clusters spanning thousands of nodes. While some AI deployments use specialized interconnects (such as InfiniBand), Arista champions Ethernet as a flexible, standards-based alternative that can scale broadly. It has even formed a collaborative “AI networking” trio with Nvidia and Celestica to advance Ethernet solutions for AI data centers. In essence, whenever a cloud provider or research lab stands up a new AI supercomputer, Arista’s gear is contending to handle the deluge of data flowing between GPUs.

Ciena (NYSE: CIEN)

HQ: USA; Coherent optical systems for high-capacity data center interconnect.

Ciena is a leading maker of the fiber-optic networking equipment that moves data between cloud data centers at light speed. In the age of AI, data center interconnects and internet backbones are straining under a deluge of machine-generated traffic. Training AI models involves shuttling petabytes of data, often between geographically separated facilities, while AI-powered services are driving up bandwidth usage across the board. Ciena’s advanced optical transport systems (like its WaveLogic coherent optical platforms) enable these huge data pipes – for example, 800 Gbps and now 1.6 Tbps wavelengths over a single fiber. Telecom carriers and cloud giants alike have been investing to upgrade their networks.

Whenever you hear about a new AI supercluster or a cloud region expansion, there’s a parallel effort to beef up the fiber links that connect those computing hubs. That’s where Ciena comes in, and the company is already benefiting as AI accelerates a broader upgrade cycle from 100G to 400G/800G optical links in networks. Also, as more former bitcoin miners pivot to providing AI data centers, all that distributed compute will need robust connectivity. Ciena’s latest generation of optical “highways” for shuttling data positions it well to capture those budgets.

Coherent (NYSE: COHR)

HQ: USA; Optical modules, lasers, and silicon photonics for AI networks.

Coherent is a leader in the photonics technology that underpins modern AI connectivity. As AI models grow larger and dataflows skyrocket, the industry is looking for faster, more efficient ways to move information between and even within machines. Coherent (the new entity formed after II-VI acquired the legacy Coherent) designs and manufactures the lasers, optical modules, and silicon photonics that make ultra-high-speed communication possible. For instance, Coherent has developed cutting-edge 1.6-terabit optical transceivers that incorporate advanced Nvidia-designed signal processors. These tiny devices can blast huge volumes of data over fiber at distances of up to 500 meters, enabling AI superclusters to function as a unified machine.

Coherent’s differentiation lies in its deep vertical integration in optics, allowing it to innovate at the very frontier of data transmission. It is collaborating with Nvidia on new “co-packaged” optics – essentially building optical interfaces directly into switch chips – to connect future “AI factories” of GPUs with even less latency and power loss. As data center networking speeds continue to increase (the move from 400G to 800G and beyond), the importance of optical links grows in tandem. Coherent, as a key supplier of the photonic engines enabling these speeds, stands to benefit across the board.

Fabrinet (NYSE: FN)

HQ: Thailand; Precision manufacturer for optical/electronic components.

Fabrinet doesn’t build its own AI chips or data centers, but it does build essential parts for those who do. This Thailand-based company is a specialist in precision manufacturing for tech hardware, and it has quietly become indispensable in the AI supply chain. Fabrinet’s factories produce the tiny optical and electronic components that go into products like high-speed transceivers, laser arrays, and advanced circuit boards. Nvidia is one of Fabrinet’s largest customers, reportedly accounting for roughly 35% of Fabrinet’s recent sales. Networking firms like Cisco and optical tech companies like Lumentum are also major clients. Essentially, many of the big names building AI systems outsource critical assembly and packaging work to Fabrinet.

What sets Fabrinet apart is its focus on the hard-to-make pieces of AI infrastructure. As the AI boom drives demand for things like 800G optical modules and sophisticated server interconnects, many OEMs rely on Fabrinet to ramp up production with high quality. The company boasts a 99.7% manufacturing precision rate, meaning its assembly lines produce highly complex photonic devices with near-zero defects – a key reason tech giants trust it with their cutting-edge products. Whenever an AI data center is being built or an AI device is being assembled, Fabrinet’s factories are likely humming in the background.

Data Center Power & Cooling

Data center power and cooling is the plumbing that makes the AI factory safe and reliable. GPUs draw immense electricity and throw off heat that air alone can’t handle, pushing operators toward liquid cooling, new power distribution, and smarter controls. Among AI infrastructure stocks, this segment benefits every time densities rise; without it, performance throttles and downtime creeps in, regardless of how many chips a customer buys.

Vertiv (NYSE: VRT)

HQ: USA; Power, cooling, and modular AI data center infrastructure.

Vertiv is in the business of keeping data centers running. Training AI models like the GPT series draws so much electricity and generates so much heat that conventional data center infrastructure is being pushed to its limits. Vertiv, a leading provider of power and cooling systems, has responded by rolling out new solutions optimized for these “AI factories.” In 2025, it unveiled a powerful suite of offerings spanning unified power/thermal management software, prefabricated high-density modules, next-gen liquid cooling, and advanced power distribution – all aimed at addressing the “soaring densities” and speed-of-deployment needs of AI data centers.

For example, Vertiv’s latest cooling units include a rear-door heat exchanger capable of removing up to 80 kW per rack (a clear nod to the heat profile of GPU racks). It has also introduced new DC power busways and a modular “overhead” infrastructure system to accelerate building out AI capacity. Vertiv’s strength is its end-to-end portfolio; it’s one of the few vendors that can deliver the entire physical infrastructure for an AI data center – from uninterruptible power supplies and room-scale cooling units down to rack-level power strips and liquid-cooling kits. As companies build or retrofit facilities for AI, Vertiv is a go-to provider to make those sites “AI-ready.”

Asetek (CPH: ASTK)

HQ: Denmark; Direct-to-chip and rack-level liquid cooling for AI servers.

Asetek is a small but focused player addressing one of AI’s thorniest challenges: heat. The company is a pioneer of liquid cooling technology. Gamers and PC enthusiasts might know Asetek for its all-in-one water coolers, but its focus in recent years has shifted to data centers and high-performance computing. The reason is simple – air cooling is no longer sufficient as AI hardware pushes rack power densities to unprecedented levels. High-density AI clusters are expected to run at 100% utilization for sustained periods, making cooling a new bottleneck. Asetek provides direct-to-chip (D2C) liquid cooling solutions that replace or augment traditional air heatsinks, directly removing heat at the source (the CPU/GPU).

Asetek has singular expertise in liquid cooling. It designs pump-and-coldplate systems that can be dropped into standard servers or integrated at the rack level (its RackCDU™ units) to enable efficient water cooling. By circulating coolant right to the hottest components, Asetek’s technology allows data centers to operate AI processors at full tilt without thermal throttling, all while using much less energy. Switching to liquid can markedly improve a facility’s power usage effectiveness, and major server OEMs have tested and adopted Asetek’s solutions.

nVent (NYSE: NVT)

HQ: UK; Racks, power, and retrofit liquid-to-air cooling for AI data centers.

nVent Electric is another picks-and-shovels beneficiary of the AI data center buildout, focused on the physical side of computing infrastructure. Historically known for electrical enclosures and cooling racks, nVent has reinvented a big part of its business around liquid cooling for high-performance computing. nVent offers solutions like hybrid liquid-to-air cooling units that can be retrofitted into standard server rows, boosting cooling capacity without a complete facility overhaul. For example, its Liquid-to-Air “sidecar” units and rear-door heat exchangers allow data centers to deploy liquid cooling at the rack level to support high-density AI racks, even if the building doesn’t have a central water cooling loop. This helps older data centers handle new AI hardware without rebuilding from scratch.

In essence, nVent helps bridge the gap between legacy infrastructure and new requirements. It makes sure the “nuts and bolts” of data centers (power, cooling, physical support) catch up to the cutting-edge servers being rolled in. But it’s not doing this in a vacuum; nVent is co-developing solutions directly with the giants of tech. It works closely with Nvidia, AWS, Microsoft and others to design custom cooling setups for each new generation of AI hardware.