What will happen when software “puts the universe in a shoebox?” When your computer screen shows a lifelike recreation of your entire city. And you see all the moving traffic. Every live event taking place. All the houses that are for sale. What would you do?

That was the idea behind Mirror Worlds (1992), a prophetic book by Yale C.S. professor David Gelernter. He described these expansive digital replicas as “high-tech voodoo dolls.” They’re deeply linked with reality, so changes in one are reflected in the other. Now, thirty years later, that’s exactly what companies are trying to create… so-called “digital twins.”

In this no-BS explainer, we’ll break down exactly what digital twins are and how they’re used today. But more importantly, we’ll explore the future technologies they’ll enable, such as smart cities and the metaverse.

What exactly is a digital twin?

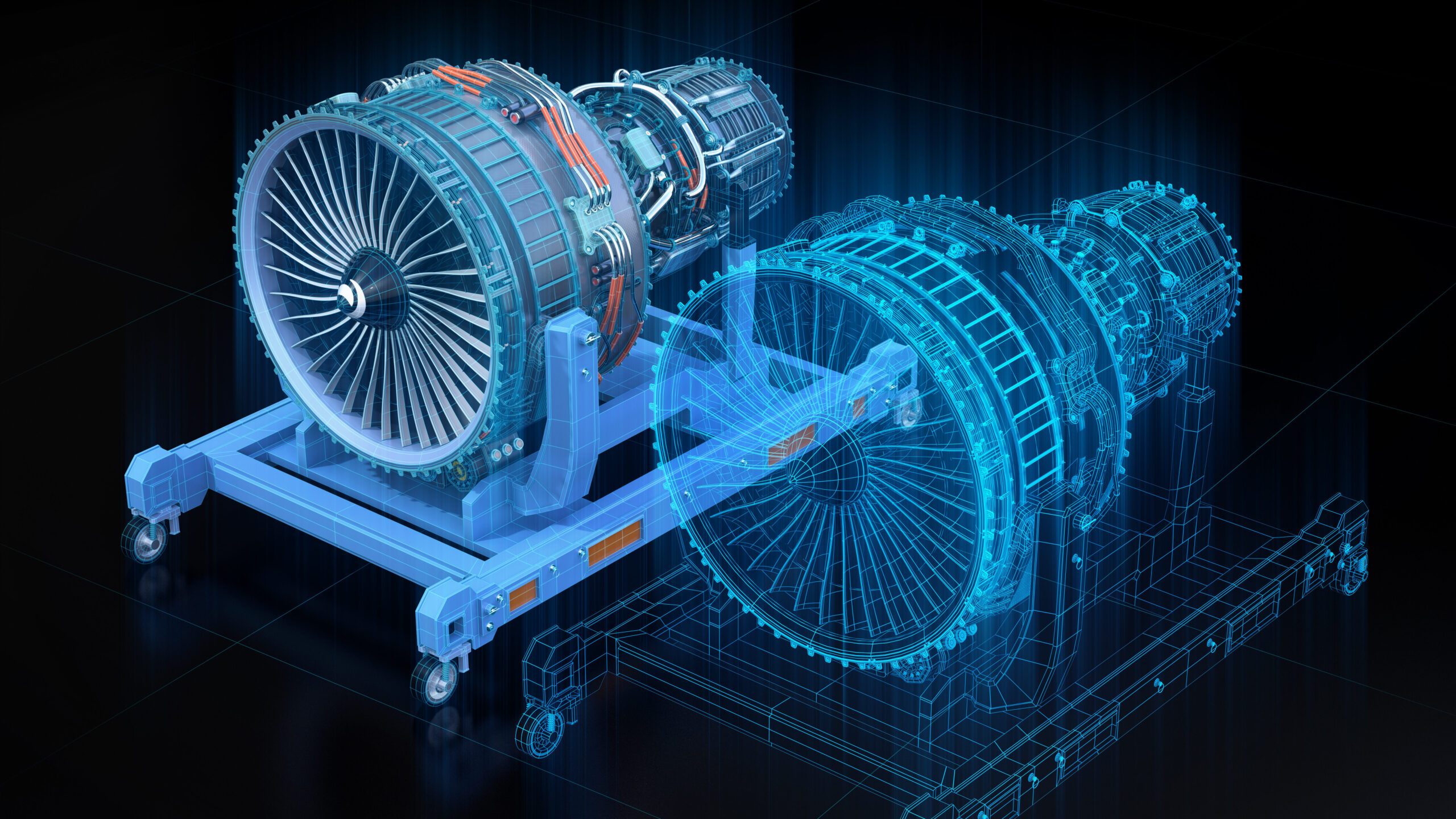

Simply put, digital twins are virtual replicas of physical objects. That could be a car, a person, or even an entire city. But a digital twin is much more than a 3D rendering. A 3D model on its own doesn’t qualify.

A digital twin implies much more functionality. For example, a digital twin mirrors real-time changes in the physical object. It can also interact with other digital twins in a shared virtual setting. These two key differences open up a world of possibilities for this technology.
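To make those two traits concrete, here’s a minimal Python sketch. Everything in it is made up for illustration (the `DigitalTwin` and `SharedWorld` names, the truck’s fields), and real twin platforms are far more elaborate. Treat it as a toy, not a blueprint.

```python
from dataclasses import dataclass, field


@dataclass
class DigitalTwin:
    """Hypothetical twin: a named bag of state mirrored from a physical object."""
    name: str
    state: dict = field(default_factory=dict)

    def sync(self, reading: dict) -> None:
        # Trait 1: mirror the latest readings from the physical object.
        self.state.update(reading)


@dataclass
class SharedWorld:
    """Hypothetical shared virtual setting where twins can see each other."""
    twins: dict = field(default_factory=dict)

    def add(self, twin: DigitalTwin) -> None:
        self.twins[twin.name] = twin

    def neighbors_of(self, name: str) -> list:
        # Trait 2: a twin can look up the other twins in the same world.
        return [t for n, t in self.twins.items() if n != name]


world = SharedWorld()
truck = DigitalTwin("truck")
bridge = DigitalTwin("bridge")
world.add(truck)
world.add(bridge)

truck.sync({"speed_kmh": 80, "weight_kg": 3200})
truck.sync({"speed_kmh": 65})  # a newer reading overwrites the old speed
```

After the second `sync`, the truck’s twin holds the latest speed alongside the weight it already knew, and `world.neighbors_of("truck")` returns the bridge’s twin. A plain 3D model does neither.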

Why are digital twins so useful?

Let’s do a thought experiment. Assume you could create a digital twin for anything you want. It looks like the real thing. It acts like the real thing. But above all, it reacts to external forces—heat, cold, chemicals, etc.—just like the real thing.

Great. Now, let’s put on our supervillain hats. We could throw our digital twins into all kinds of unsavory scenarios. For example, let’s say you want to test the design for a new pickup truck. Want to see if it crumbles in a car crash? Or if it can survive a severe blizzard? Or if it can plow through hordes in a zombie apocalypse?

Well, with digital twins, we could answer those questions from the safety of a computer screen. We wouldn’t need to spend millions on trial and error in the real world. Instead, we’d make a few tweaks to our scenario, and the consequences would appear on screen. Think The Sims, but turned up to 11.

Who uses digital twins right now?

To be clear, we’re nowhere near the point of being able to create true “digital doppelgängers.” Still, even today’s rudimentary digital twins are already very useful. They’re especially valuable in fields with high costs of failure.

For example, NASA famously used an early form of digital twin in the Apollo missions. The term didn’t exist back then, but mission control kept ground-based simulators that mirrored the spacecraft. So when Apollo 13’s oxygen tank exploded 210,000 miles from Earth, mission control was ready.

NASA used cutting-edge telecommunications technology to pass data back from the spacecraft. That data was then used to modify its digital twin to reflect the craft’s crippled state. NASA was able to diagnose and solve the problem remotely—and bring the astronauts home safely.

Digital twins are also used in the automotive industry. They can help simulate crash tests before risking real vehicles. They’re also used in the design stage and to monitor cars for predictive maintenance. In fact, Tesla reportedly maintains a digital twin for every car it sells.

But it’s not only engineering that can benefit from digital twin technology. Retail and banking companies have begun using them to simulate all the steps of a customer’s journey. With this data, they can personalize their customer service on a 1-to-1 basis. This is so promising that there’s now a dedicated (albeit not very creative) term for it: Digital Twin of Customer.

Digital twin vs. simulation: what’s the difference?

Simulations are nothing new. So is a “digital twin” just marketing-speak for a fancier simulation? Well, yes and no. Yes, they do fall under the broad idea of a simulation. But as we alluded to earlier, digital twins improve on traditional simulations in two key ways.

First, digital twins use real-time data.

Unlike traditional simulations, digital twins constantly update using real-time data. They rely on sensors of all shapes and sizes (a.k.a. the “Internet of Things”). Thus, digital twins can be applied to a variety of time-sensitive problems.

For example, Lockheed Martin is currently developing a digital twin of the Earth’s atmosphere. It will visualize environmental conditions and track extreme weather events around the world. Live data from satellites, weather balloons, and radar will feed into real-time updates. This “Earth twin” will help scientists make more accurate forecasts globally.

Second, digital twins can operate at a much greater scale.

Complex systems often encompass thousands—if not millions—of moving parts. Traditional simulations cannot model the trillions of possible permutations. You might be able to simulate one robotic arm on a factory floor. But good luck simulating an entire factory of robots, workers, parts, and processes. At that scale, there are far too many states to model at once, even for a supercomputer.

However, digital twins can behave more like Lego blocks. You can create a digital twin of each individual component or machine. That keeps the individual complexity manageable. But when you combine all the individual twins, you get a larger macro-twin. That macro-twin is modular and can grow as more pieces are added. The whole becomes greater than the sum of its parts.
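Here’s a rough Python sketch of that Lego-block idea. The class names (`ComponentTwin`, `MacroTwin`) and the single health flag are assumptions for the sake of the example. A real factory twin would track far more than “ok” or “fault.”

```python
from dataclasses import dataclass, field


@dataclass
class ComponentTwin:
    """Hypothetical twin of a single machine, tracking one health flag."""
    name: str
    healthy: bool = True

    def status(self) -> dict:
        return {self.name: "ok" if self.healthy else "fault"}


@dataclass
class MacroTwin:
    """Hypothetical container twin built from smaller twins or other macro-twins."""
    name: str
    parts: list = field(default_factory=list)

    def add(self, part) -> None:
        # Growing the macro-twin is just snapping on another block.
        self.parts.append(part)

    def status(self) -> dict:
        # The macro-twin's view is assembled from its parts' own reports.
        merged = {}
        for part in self.parts:
            merged.update(part.status())
        return merged


line = MacroTwin("assembly_line")
line.add(ComponentTwin("robot_arm_1"))
line.add(ComponentTwin("conveyor", healthy=False))

factory = MacroTwin("factory")
factory.add(line)                       # a macro-twin can contain macro-twins
factory.add(ComponentTwin("forklift"))

print(factory.status())
# {'robot_arm_1': 'ok', 'conveyor': 'fault', 'forklift': 'ok'}
```

Notice that the factory never models the conveyor directly. It just asks each block for its own report, which is what keeps the complexity manageable as the twin grows.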

Why should you care about digital twins?

It’s tempting to write off digital twins as just another industrial tool. But they actually have far-reaching implications, even for your day-to-day life. Here’s how.

Digital twins will be the backbone of smart cities.

In 2022, Singapore created a 1:1 digital twin of its entire city, divided into 728,000,000 square-meter tiles. Using laser scanners mounted on planes, the entire city was mapped in under two weeks. And unlike previous 3D models, this digital twin updates with real-time data from drones and sensors throughout the city. It’s a complete framework for geospatial data.

So what’s the point of having a digital twin of an entire city? Well, we’re still scratching the surface of possibilities. But to name a few:

1.) Digital twins can protect city infrastructure 24/7.

They need no sleep or lunch break, and can catch a burst pipe or gas leak instantly. When combined with AI, they can also help predict needed repairs ahead of time. This would prevent costly maintenance surprises and keep residents safer.

2.) Digital twins give urban planners a risk-free sandbox.

According to UN projections, over two-thirds of the world’s population will live in cities by 2050. City planners have a tall task ahead, but digital twins will be there to help. By simulating their projects before building them, planners can avoid unintended consequences. They can predict and visualize how the project will affect traffic flow, retail access, and sustainability.

3.) Digital twins can reroute traffic and respond to accidents.

When it comes to speed, responsiveness, and precision… we’re living in the past. Quite literally, everything we do right now is backward-looking. For example, only after a traffic accident clogs up an intersection do we send troopers and put up “detour” signs.

But digital twins can help us look forward instead. With the help of AI crunching through all the data, we could predict problems in advance. For example, we could predict high-risk areas during a snowstorm and divert traffic away before an accident occurs. Or in the event of a disease outbreak, public health AI could warn residents of hot zones to avoid.

4.) Digital twins can aid in disaster mitigation.

We’d no longer need to approach catastrophic events “blindfolded.” Using data-rich digital twins, AI could forecast the aftermath of an earthquake, right down to the street level. This foresight would allow officials to rapidly devise contingencies and evacuation routes.

5.) Digital twins will enable other smart city technologies.

The “smarts” in a smart city must be able to coexist with humans and dozens of other systems. For example, self-driving cars need to know about other cars on the road. Smart energy grids need to track usage rates across the city. Delivery drones need to dodge power lines… and so on. All these applications need data about their surroundings, which digital twins can provide.
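To ground the first item on that list (catching a burst pipe), here’s a rough Python sketch of how a twin might flag one. The `PipeTwin` class, the 30% threshold, and the pressure readings are all invented for illustration. A real utility would tune these against its own network.

```python
from collections import deque
from statistics import mean


class PipeTwin:
    """Hypothetical twin of a water pipe fed by a pressure sensor."""

    def __init__(self, window: int = 5, max_drop: float = 0.3):
        self.history = deque(maxlen=window)  # recent normal readings, in bar
        self.max_drop = max_drop             # assumed threshold: 30% below baseline

    def update(self, pressure_bar: float) -> bool:
        """Record a reading and return True if it looks like a burst."""
        alert = False
        if len(self.history) == self.history.maxlen:
            baseline = mean(self.history)
            alert = pressure_bar < baseline * (1 - self.max_drop)
        if not alert:
            # Only normal readings feed the baseline, so a burst can't mask itself.
            self.history.append(pressure_bar)
        return alert


pipe = PipeTwin()
readings = [4.0, 4.1, 3.9, 4.0, 4.0, 3.9, 2.1]  # made-up sensor data, in bar
alerts = [pipe.update(r) for r in readings]
```

With this made-up data, only the final reading trips the alert. The twin waits until it has a full window of history before judging anything, which avoids false alarms at startup.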

Digital twins will manage fleets of autonomous vehicles.

Amazon is already using fleets of autonomous robots to speed up fulfillment. They operate in controlled environments, like warehouse floors. But what if you want to deploy them to remote corners of the world? Or if you need them to adapt to unfamiliar environments?

That’s where digital twins could help bridge the gap. There’s a subfield of robotics called “swarm robotics.” The idea is that a network of small robots can tackle tasks that individual ones cannot. Each robot takes on a small part of the task, so if one fails, there are others ready to pick up the slack.

For example, a swarm of autonomous boats could clean up toxic waste or monitor the vast ocean. The U.S. Navy is pursuing that very idea for harbor defense. Swarm robots are also promising for agriculture, emergency response, and supply chains.

But for robotic swarms to work, they’ll need to synchronize their actions in a shared operating environment. This is where digital twins would come in. Sensors on the robots would send rich data back in real time. Each digital twin then gets updated with its robot’s location, status, and objectives. This would enable robots to cooperate in close proximity without clashing. And if one robot malfunctions, the other members of the swarm would even be able to help repair or recover it.
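As a rough illustration, here’s how that bookkeeping might look in Python. The `RobotTwin` and `SwarmTwin` names, the two-meter safety gap, and the boat data are assumptions made for this sketch, not any real swarm framework.

```python
import math
from dataclasses import dataclass


@dataclass
class RobotTwin:
    """Hypothetical twin holding a robot's location, status, and objective."""
    robot_id: str
    x: float = 0.0
    y: float = 0.0
    status: str = "ok"
    objective: str = "idle"


class SwarmTwin:
    def __init__(self, min_gap_m: float = 2.0):
        self.robots = {}
        self.min_gap_m = min_gap_m  # assumed safe separation, in meters

    def report(self, robot_id: str, **fields) -> None:
        # Each sensor report updates (or creates) that robot's twin.
        twin = self.robots.setdefault(robot_id, RobotTwin(robot_id))
        for key, value in fields.items():
            setattr(twin, key, value)

    def too_close(self) -> list:
        # Pairs of robots that are closer together than the safe gap.
        ids = sorted(self.robots)
        pairs = []
        for i, a in enumerate(ids):
            for b in ids[i + 1:]:
                ra, rb = self.robots[a], self.robots[b]
                if math.dist((ra.x, ra.y), (rb.x, rb.y)) < self.min_gap_m:
                    pairs.append((a, b))
        return pairs

    def needs_help(self) -> list:
        # Robots whose twins report anything other than "ok".
        return [r.robot_id for r in self.robots.values() if r.status != "ok"]


swarm = SwarmTwin()
swarm.report("boat_a", x=0.0, y=0.0, objective="scan")
swarm.report("boat_b", x=1.0, y=1.0, objective="scan")
swarm.report("boat_c", x=10.0, y=0.0, status="fault")
```

Here `swarm.too_close()` flags boats A and B as a pair to steer apart, and `swarm.needs_help()` singles out boat C for recovery. Because every robot reads from the same shared picture, none of them has to guess what the others are doing.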

Digital twins will guide “Peripherals” on the harshest jobs.

Imagine humanoid robots that have digital twins, which are then “connected” to skilled humans. This is a concept explored in William Gibson’s science fiction novel The Peripheral (now a TV show). These lifelike remote-controlled robots would have many practical uses.

To start, these robots could tackle jobs in extreme, variable, and dangerous conditions. In search and rescue missions, human operators could control the robots from a safe zone. The robots might even have extra sensors that detect heat or sound to locate survivors. They would also be ideal for dangerous jobs like power line repair, oil rig maintenance, and biohazard cleanup.

Of course, we could also send these “peripherals” out to space. Ventures like orbital manufacturing or asteroid mining can benefit from human finesse… but they’re too dangerous to risk human lives. Instead, we can send robots. When carrying out routine tasks, the robots can be AI operated. But when things go south—unexpected debris, hardware malfunction, alien invasion, etc.—an expert human operator can step in.

At this point, you may be thinking: this sounds too far-fetched. But guess what? We already have products that are moving toward this idea. The da Vinci Surgical System is a set of robotic surgery arms that are controlled by a human surgeon. It has been used in over 8 million surgeries since 2000. Compared to traditional surgeries, it offers greater precision and accuracy. That leads to smaller incisions, less tissue damage, and shorter hospital stays.

These “robotic-assisted” tools will naturally progress toward greater range of motion. Start with a robotic arm… add in a torso… some other limbs… and so on. Pretty soon, we’re going to want full body control, and digital twins will serve as the bridge. And at that point, we’d have created a “peripheral.”

Digital twins will help populate the metaverse.

The term “Metaverse” was originally coined in Snow Crash (1992) by Neal Stephenson. In the novel, the protagonist Hiro and his roommate share a 20×30 ft U-Stor-It container near L.A.’s slums. It features a “concrete slab floor” and “corrugated steel walls.” But that’s only in the real world. In the Metaverse, Hiro is a “warrior prince” who lives in a mansion and gets invited to exclusive clubs.

While fantastical, Snow Crash illustrates the appeal of a virtual world. It lets us explore and experience things that are infeasible in our day-to-day lives. For example, in the metaverse, you could…

- Sightsee in 10 different countries over a weekend, without paying for airfare.

- Float through a virtual landscape filled with exotic and extinct animals.

- Experience what it would be like to live in the Roman Empire.

- Walk around on the Moon, or go sledding in its craters.

- Share a dinner table with family members on the other side of the globe.

- Compete in a massive scavenger hunt with millions of other players around the world.

- Travel to another universe with different laws of physics.

- Design your own city and watch it come alive as other users visit.

- Dive deep underwater and explore the wonders of the Mariana Trench.

At this point, you may be wondering, “These sound cool, but who’s going to build all these worlds?” Well, that’s a great question, because it would be impossible for developers to program each one from scratch. It would be too expensive, time-consuming, and complicated.

That’s where digital twins come in. They would allow developers to quickly replicate real-world objects in the virtual world… from a bicycle to an entire city (like Singapore’s). They could then alter, duplicate, or combine the digital twins in creative ways. Thus, digital twins could serve as building blocks for the metaverse.

But let’s not stop our imaginations there. In the early days of television, professional studios produced everything. But today, you can go on YouTube and watch billions of user-generated videos. Many were filmed using smartphones, and are more entertaining than high-budget shows. Nothing is stopping that same type of creator economy from emerging in the metaverse.

Put another way: The first computers filled up entire rooms. But now our smartphones are more powerful than the computers used to get us to the Moon. One day, digital twin technology will also fit in the palms of our hands.

At that point, users will be able to turn almost anything into a digital twin. That’s how we’ll populate the ever-expanding metaverse. The metaverse will be an outlet for creativity as much as it will be a means to escape the mundane.

What’s next for digital twins?

Right now, we’re definitely past the phase of, “ok—it’s basically a glorified 3D model.” Even with today’s software, we can create a digital twin of the International Space Station and track its orbit. The US Air Force is building digital twins of the F-16. Police departments are using them to map out crime scenes. And businesses like Lowe’s are creating virtual versions of their stores for customers to explore.

Even so, there’s still a lot of work to do before we can make actual “digital doppelgängers.” The big hurdle right now is figuring out a way to replicate properties that can’t be measured directly. For example, we can’t build sensors tiny enough to measure a microscopic crack in an airplane fuselage. So the next phase of digital twin progress will rely on AI and quantum computers that can “fill in the gaps.”

But rest assured, as we continue to fill those gaps, digital twins will continue to get more lifelike. And with a blossoming IoT and metaverse, true “mirror worlds” are not too far off.