Edge AI aims to bring artificial intelligence directly to devices, with no cloud connection required. This enables faster decisions and protects sensitive data by keeping it on the device. Imagine a world where your car makes split-second decisions to avoid accidents, or where your smartphone understands your commands even without an internet connection. This is the promise of edge AI, and it represents a major paradigm shift. In this guide, we examine the top edge AI stocks for 2024, ranked by pure-play focus. The stocks are split into three tiers, each representing a different level of commitment to edge AI.

Note: We make every effort to keep our information accurate and up-to-date. However, technology markets move fast, and company situations can change rapidly. Please use this guide as an introduction to the edge AI landscape, but ultimately, do your own due diligence before taking action.

Tier 1: Pure-Play Edge AI Stocks

This tier represents the vanguard of edge AI stocks. These companies have placed their bets squarely on the future of edge artificial intelligence. If you’re looking for undiluted exposure to the edge AI market, these stocks offer the most direct path.

Ambarella (AMBA)

Ambarella (AMBA) is a name that stands out in edge AI, and for good reason. What makes Ambarella so interesting is their laser focus on integrated computer vision chips. Computer vision is the subfield of AI that’s all about helping machines see and interpret the world. As you might guess, computer vision is vital for many edge devices, such as security cameras, self-driving cars, and drones. All of these devices benefit from AI capabilities that don’t require sending data back to the cloud for processing.

Now, you might be thinking, “Surely there are bigger companies working on this kind of technology?” And you’d be right. But here’s where Ambarella shines: they’ve packed a ton of processing power into chips that sip power like it’s a fine wine. This energy efficiency is huge for edge devices, which often have room for only small batteries.

Ambarella’s secret sauce is their CVflow architecture (“Computer Vision flow”). This is a bespoke chip design that’s optimized for the kinds of calculations needed for AI and computer vision. Their CV3-AD685 chip, for example, is a beast for advanced driver-assistance systems (ADAS) and autonomous vehicles. This chip is fast, clocking in at 500 tera operations per second (TOPS). For context, just a few years ago, automotive AI chips were struggling to reach 30 TOPS. It also supports multi-sensor perception, including high-resolution cameras, radar, and lidar.

What’s potentially most reassuring for investors is that Ambarella has its fingers in a lot of edge AI pies. They’re not just focused on one industry. Their chips are finding homes in both consumer products and industrial use cases. This diversification helps protect them from downturns in any single market and gives them multiple win conditions.

That said, the semiconductor industry is notoriously cyclical, and Ambarella competes with serious heavyweights like Qualcomm and NVIDIA, two other players on this list. But for investors looking for focused exposure to edge AI, Ambarella is a top pure-play option. And unlike some of the more speculative startups in this field, Ambarella has a proven track record of real, shipped products.

Lantronix (LTRX)

Lantronix (LTRX) is an intriguing player in the edge AI space, and its inclusion in Tier 1 makes sense when you look at its trajectory. The company has been around since 1989, but in recent years, it has pivoted hard into the IoT and edge computing world. In some ways, Lantronix is the dark horse in the edge AI race.

Lantronix actually has two key strengths in the edge AI market. First, instead of focusing on chips or software, they’re taking a more holistic view. They provide end-to-end solutions that bring together hardware, software, and services. This approach is especially appealing to businesses that want to use edge AI but don’t have the in-house expertise to piece it together from scratch.

Lantronix’s second key strength is their focus on rugged, compact devices that can work in harsh environments. Their FOX3 series, for instance, is designed to operate in extreme temperatures ranging from -40°C to +85°C (-40°F to +185°F). This makes them suitable for deserts, arctic regions, or industrial settings like factories and oil rigs. Many of their devices are also built to withstand high levels of vibration and shock, and they’re tested to the MIL-STD-810G military standard, making them well suited to areas prone to seismic activity.

For investors, these two strengths position Lantronix in an interesting niche. They’re not competing head-to-head with the tech giants in general-purpose edge AI. Instead, they’re carving out a space in specialized applications where their unique combination of ruggedness and integrated solutions is highly valued. Plus, their relatively small size makes them a potential acquisition target if a larger tech company decides it wants to beef up its edge AI capabilities.

In the end, Lantronix’s inclusion in this list highlights an important trend in the edge AI market: it’s not just about who can make the fastest chip or the smartest algorithm. Success in this space may well come down to who can provide the most comprehensive, user-friendly solutions. And that’s exactly what Lantronix is aiming to do.

Hailo (Pre-IPO)

Hailo is an Israeli startup making waves in the edge AI chip market, and it’s gearing up for an IPO. Hailo’s claim to fame is their innovative AI architecture designed for edge devices. Instead of trying to adapt CPUs or GPUs to AI tasks, they’ve created an architecture that mirrors the structure of the neural networks it runs.

How? Well, traditional processors force neural networks to fit into a predefined architecture. Hailo’s chip, by contrast, adapts its structure to match the specific neural network it’s running. It’s almost like the chip becomes a physical manifestation of the AI model. As a result, the company claims its chip delivers up to 20 times the performance per watt of traditional processors.

In edge AI, those efficiency gains can make all the difference. Edge devices often have stringent power and size constraints, with little room for cooling units. If you’ve ever run a game on ultra-high graphics settings, you know how hot a GPU can make a computer. That level of power consumption simply won’t scale to large edge AI deployments like smart cities or energy grids, where thousands of devices may need to run continuously.

Hailo’s flagship product, the Hailo-8, is a tiny chip about the size of a penny. Despite its small stature, it can perform up to 26 TOPS while consuming just a few watts of power. Compared to the 500 TOPS Ambarella chip mentioned earlier, this may seem unimpressive. But remember that Ambarella’s chip is much larger and intended for automobiles, while Hailo’s fits in a form factor small enough for a smartphone. To put it in perspective, the Hailo-8 is comparable in performance to some data center-grade AI chips.
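Raw TOPS numbers are easier to compare once power draw is factored in. The sketch below computes TOPS per watt for two chips. The TOPS values come from this article, but the wattages (2.5 W and 50 W) are assumptions chosen purely for illustration, not vendor specifications for the Hailo-8 or Ambarella’s CV3-AD685, and the function and variable names are hypothetical.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Return throughput per watt of power drawn (TOPS/W)."""
    if watts <= 0:
        raise ValueError("power draw must be positive")
    return tops / watts


# Hypothetical operating points: the TOPS values come from the text,
# but the power figures are assumptions for illustration, not vendor specs.
edge_tops, edge_watts = 26.0, 2.5    # small edge accelerator drawing "a few watts"
auto_tops, auto_watts = 500.0, 50.0  # large automotive SoC with an assumed 50 W budget

edge_eff = tops_per_watt(edge_tops, edge_watts)
auto_eff = tops_per_watt(auto_tops, auto_watts)

raw_ratio = auto_tops / edge_tops  # how much more raw throughput the big chip has
eff_ratio = edge_eff / auto_eff    # small chip's efficiency relative to the big chip

print(f"raw throughput ratio: {raw_ratio:.1f}x")
print(f"edge: {edge_eff:.1f} TOPS/W, automotive: {auto_eff:.1f} TOPS/W")
print(f"efficiency ratio: {eff_ratio:.2f}x")
```

Under these assumed numbers, the larger chip has roughly 19 times the raw throughput, yet the two end up with similar efficiency. Change the power assumptions and the picture changes, which is why per-watt figures matter more than headline TOPS for battery-powered edge devices.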

Now, it’s worth noting that Hailo is still a young company. They were founded in 2017, and their chips only recently started shipping in volume. They’re going up against established semiconductor players, but their focus on edge AI gives them a unique position. For investors, Hailo represents a clean pure-play bet on the future of edge AI, although its shares can’t be bought on a public exchange until the IPO takes place.

Tier 2: Companies with Strong Edge AI Focus

The edge AI stocks in this tier represent companies that have made significant commitments to edge AI, but it’s not their sole focus. These firms have diversified business models, with edge AI forming a major and growing part of their strategy. They offer investors a balance between focused exposure and the stability of more established business lines.

SoundHound AI (SOUN)

SoundHound AI (SOUN) is a fascinating player in the edge AI space with a very specific focus: voice AI technologies. If you’ve ever used a voice assistant like Siri or Alexa, you’ve got a basic idea of what SoundHound is working on. But they’re taking it to a whole new level, especially when it comes to edge computing.

SoundHound’s journey is pretty interesting. They started out way back in 2005 as a music recognition app, kind of like Shazam. But over the years, they’ve evolved into a powerhouse in voice AI. Their big selling point? A proprietary natural language understanding (NLU) technology that they claim is more advanced than competitors’.

For example, SoundHound has developed something called “Deep Meaning Understanding”. This allows their AI to understand context and handle follow-up questions without you having to repeat information. You could ask, “What’s the weather like in New York?” and then follow up with “What about next week?” without having to specify New York again. It might not sound revolutionary, but this kind of “intuitive” interaction is actually quite challenging for AI.
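To make the follow-up example concrete, here is a toy sketch of one common way dialog systems carry context between turns: remember the last intent and its slot values, and let a follow-up overwrite only what it mentions. This is not SoundHound’s Deep Meaning Understanding. The `DialogState` class, the `get_weather` intent, and the slot names are hypothetical, and the sketch assumes an upstream language-understanding step has already turned each utterance into an intent plus slots.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DialogState:
    """Remembers intent and slots from earlier turns so follow-ups can omit them."""
    intent: Optional[str] = None
    slots: dict = field(default_factory=dict)

    def update(self, intent: Optional[str], new_slots: dict) -> dict:
        # A follow-up with no explicit intent inherits the previous one.
        if intent is not None:
            self.intent = intent
        # New slot values overwrite old ones; anything unspecified carries over.
        self.slots.update(new_slots)
        # Return a fresh dict so callers can't mutate the stored state.
        return {"intent": self.intent, **self.slots}


state = DialogState()
# "What's the weather like in New York?"
first = state.update("get_weather", {"location": "New York", "date": "today"})
# "What about next week?"  (no location mentioned)
follow_up = state.update(None, {"date": "next week"})
print(first)      # {'intent': 'get_weather', 'location': 'New York', 'date': 'today'}
print(follow_up)  # {'intent': 'get_weather', 'location': 'New York', 'date': 'next week'}
```

The hard part in real systems is deciding when context should carry over and when the user has changed topic; this sketch always carries it over.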

SoundHound’s technology can run directly on edge devices, without constantly pinging a cloud server. This is useful in voice AI for several reasons. First, it means faster response times. When you’re talking to a voice assistant, even a fraction-of-a-second delay can make the interaction feel unnatural. Second, it means that sensitive voice data doesn’t need to leave the device. In an era where people are increasingly worried about their personal data, this local processing is a major selling point. Their technology has made its way into both consumer products (like Mercedes-Benz cars) and businesses (like White Castle restaurants).

For investors, SoundHound represents an interesting combo play on both edge and voice AI. They’re not purely focused on edge technologies, but their expertise in voice AI gives them a separate win condition. Today, we’re already seeing smart, voice-activated vacuums, fridges, and even vending machines. As this “smartification” trend continues, SoundHound could be uniquely positioned to capitalize.

Synaptics (SYNA)

Synaptics (SYNA) has been around since 1986, and if you’ve used a laptop touchpad or a smartphone touchscreen, there’s a good chance you’ve interacted with their technology. They’ve been a leader in human interface solutions for decades. But in recent years, Synaptics has pivoted hard into the world of edge AI.

What makes Synaptics stand out in the edge AI space is their focus on what they call “perceptive intelligence.” Perceptive intelligence goes beyond just crunching numbers or running algorithms. It’s about creating devices that can sense their environment in ways that mimic human perception.

For example, the company’s chips can integrate input from multiple types of sensors: touch, audio, vision, and even temperature or humidity. This allows devices to gather a more complete picture of their surroundings. More importantly, their chips can fuse data from all these different sensors in real time. This is crucial for creating more context-aware and responsive devices. Plus, on-device processing makes the entire process faster and more private.

This combination of features makes their products useful in unique ways. For example, imagine a smart home device that can hear and understand voice commands, help protect the home from intruders, and even detect when a newborn baby is crying… all while respecting privacy by keeping data local. That’s the kind of device their AudioSmart products are designed for.
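Sensor fusion can take many forms. One simple, textbook version is inverse-variance weighting: when several sensors estimate the same quantity, each reading is weighted by how reliable it is. The sketch below assumes a camera and a microphone array each estimate the direction of a person who is speaking. The readings, variances, and the `fuse_estimates` function are made up for illustration, and this is not a description of how Synaptics’ chips actually fuse data.

```python
from typing import Sequence, Tuple


def fuse_estimates(readings: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent estimates of one quantity.

    Each reading is (value, variance). Less noisy sensors (smaller variance)
    get proportionally more weight, and the fused variance is never larger
    than the best individual sensor's variance.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / var for _, var in readings]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Hypothetical direction-to-speaker estimates in degrees: (value, variance).
camera = (30.0, 4.0)       # vision: fairly precise
mic_array = (36.0, 36.0)   # audio direction-of-arrival: noisier
value, variance = fuse_estimates([camera, mic_array])
print(f"fused direction: {value:.1f} deg, variance {variance:.1f}")
```

The fused estimate lands close to the more reliable camera reading, and its variance is lower than either sensor’s alone, which is the basic payoff of combining sensors.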

Synaptics is also doing interesting things with computer vision through their VideoSmart chips (yes, their naming conventions lack some creativity). These context-aware chips are well suited to things like smart doorbells, security cameras, or even augmented reality glasses.

One of Synaptics’ key advantages is their long-standing relationships with consumer electronics manufacturers. They’ve been supplying components to companies like Samsung, Dell, Lenovo, and LG for years. In other words, they already have an inside track when it comes to integrating new AI capabilities into upcoming products. This existing customer base could be a significant growth driver as more and more devices incorporate edge AI.

(That said, there’s an important caveat here. While Synaptics has these established relationships, the tech world moves fast. Companies often switch suppliers or bring development in-house. For instance, Apple, once a major Synaptics customer, has largely moved away from using their components in recent years.)

Innodata (INOD)

Innodata (INOD) has been around since 1988, but their latest pivot towards AI and machine learning is intriguing. At its core, Innodata is a data company. They specialize in helping businesses manage, analyze, and leverage their data, and they have also developed expertise in natural language processing (NLP).

Now, you might be wondering, “What does this have to do with edge AI?” Well, Innodata has been working on deploying these NLP models at the edge. In many industries, like healthcare or finance, keeping sensitive information on-device rather than sending it to the cloud is crucial for complying with data protection laws. Innodata’s edge AI solutions make this possible while still providing advanced NLP capabilities.

For example, one case study they’ve shared involves an undisclosed Fortune 500 insurance company. Innodata developed an edge AI solution that could process insurance claims in real-time, right at the point of submission. This AI could understand complex policy language, verify coverage, and even detect potential fraud… all without sending sensitive customer data to the cloud.

Innodata’s key differentiator is their expertise in handling complex, industry-specific data. They’ve developed edge AI that can process legal documents, medical records, or financial reports in real time. This is difficult because the AI must understand industry jargon, regulatory requirements, and contextual nuances. Innodata tackles this challenge with a “human-in-the-loop” approach: a network of subject matter experts who continuously refine and validate these models.

For investors, Innodata offers exposure to edge AI in a way that’s fundamentally different from chip manufacturers or general AI platforms. They’re betting on the increasing value of specialized, industry-specific AI solutions that can work at the edge. This focus could insulate them from some of the intense competition in the broader AI hardware market.

Tier 3: AI Hardware Makers with Edge AI Angles

This tier includes some of the biggest names in tech hardware, now turning their attention to edge AI. While these may not be pure-play edge AI stocks, they represent companies with the resources and expertise to make significant impacts in the field. These stocks offer investors exposure to edge AI as part of a broader AI and tech hardware play.

Super Micro Computer (SMCI)

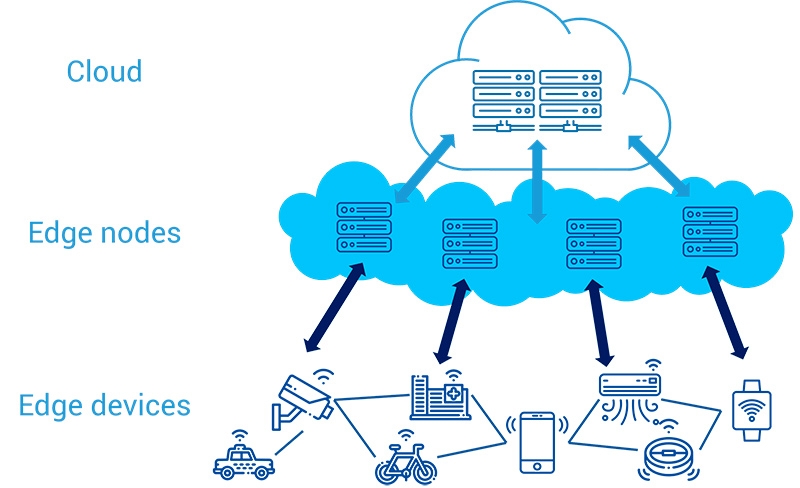

Super Micro (SMCI) is making a name for itself in the edge AI world by providing crucial hardware infrastructure. In short, they build the powerful servers that many edge AI deployments rely on. But wait a minute. Why would edge AI need servers? Isn’t the whole point to run AI on the device instead?

Well, some edge AI applications still need major computing power that exceeds what smaller devices can provide. For applications like smart cities or industrial IoT, there’s often a need for “edge data centers” – smaller-scale data centers located closer to the point of data generation. SMCI provides servers for these edge data centers. Also, in many edge AI scenarios, data from multiple sources needs to be aggregated before being used for more complex AI tasks. SMCI’s servers can handle this intermediate step.

So while it’s true that edge AI often runs on smaller devices, there’s still a role for more powerful, server-like hardware in the edge AI ecosystem. SMCI is positioning itself to provide this crucial infrastructure layer.
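The intermediate aggregation step mentioned above can be sketched as follows: raw readings from many devices arrive at an edge server, which reduces each time window to compact per-device summaries before heavier AI models consume them. The device IDs, the vibration readings, and the `aggregate_window` function are hypothetical; this is a generic illustration, not SMCI software.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def aggregate_window(readings: Iterable[Tuple[str, float]]) -> Dict[str, dict]:
    """Collapse one time window of raw (device_id, value) readings into per-device summaries."""
    by_device: Dict[str, List[float]] = defaultdict(list)
    for device_id, value in readings:
        by_device[device_id].append(value)
    # One small summary per device replaces every raw sample in the window.
    return {
        device_id: {"count": len(vals), "mean": mean(vals), "max": max(vals)}
        for device_id, vals in by_device.items()
    }


# Hypothetical vibration readings (mm/s) from three factory sensors in one window.
window = [
    ("sensor-01", 3.0), ("sensor-02", 7.0), ("sensor-01", 5.0),
    ("sensor-02", 9.0), ("sensor-03", 1.0),
]
summary = aggregate_window(window)
print(summary["sensor-01"])  # {'count': 2, 'mean': 4.0, 'max': 5.0}
```

Summarizing close to the source cuts the volume of data that later stages must handle, which is one reason edge data centers sit between devices and the cloud.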

NXP Semiconductors (NXPI)

NXP (NXPI) is bringing edge AI to the automotive and industrial worlds, but with a crucial twist: they’re making it safe and secure. In industries where a glitch could mean disaster, NXP’s chips combine AI processing with real-time performance and functional safety features. After all, it’s not good enough to just make everything smarter. We also need to make smart things that won’t fail catastrophically.

Take self-driving cars, for instance. Sure, many AI chips can run obstacle detection algorithms. But NXP’s chips are designed to meet stringent automotive functional safety standards like ISO 26262. Specifically, NXP’s automotive processors feature:

- Hardware-based safety features: Such as lockstep cores that run the same operations in parallel and compare the results to detect errors.

- Built-in security modules: To protect against hacking and ensure the integrity of AI algorithms.

- Real-time processing capabilities: Essential for responding to critical events with minimal latency.

- Certifications: Components developed in line with standards such as ISO 26262, reducing the certification burden for car manufacturers.

This dual focus on AI capability and safety compliance sets NXP apart in the edge AI race, especially in high-stakes applications where reliability is non-negotiable.
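Lockstep is a hardware mechanism, but its core logic can be sketched in software: run the same computation on two redundant paths and compare the outputs. A mismatch reveals that a fault occurred, but with only two copies there is no way to tell which one is wrong, so the system has to react, for example by entering a safe state. The `brake_command` rule and its 10 m threshold are hypothetical, and this is an analogy, not how NXP’s silicon is implemented.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


class LockstepMismatch(RuntimeError):
    """Raised when the two redundant executions disagree."""


def run_in_lockstep(compute: Callable[[], T], shadow: Callable[[], T]) -> T:
    """Software analogy of dual-core lockstep: run the same work twice and compare.

    Two copies can only *detect* a disagreement; they cannot tell which copy
    is wrong, so the caller must fall back to a safe state.
    """
    primary = compute()
    checker = shadow()
    if primary != checker:
        raise LockstepMismatch(f"outputs diverged: {primary!r} != {checker!r}")
    return primary


def brake_command(distance_m: float) -> bool:
    # Hypothetical control rule: brake if an obstacle is closer than 10 m.
    return distance_m < 10.0


ok = run_in_lockstep(lambda: brake_command(8.0), lambda: brake_command(8.0))
print(ok)  # True

try:
    # Simulate a transient fault flipping the shadow copy's result.
    run_in_lockstep(lambda: brake_command(8.0), lambda: not brake_command(8.0))
except LockstepMismatch:
    print("mismatch detected: entering safe state")
```

Real lockstep cores compare results cycle by cycle in hardware, which catches faults far faster than this function-level comparison could.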

Qualcomm (QCOM)

Qualcomm’s (QCOM) edge AI play is all about piggybacking on the ubiquity of mobile devices. Instead of focusing on standalone AI hardware, they’re integrating AI processing, such as their Hexagon NPU, directly into the Snapdragon chips that already power many of our smartphones. This approach has a unique advantage: it turns every one of those phones into a potential edge AI device.

The real kicker? As 5G networks roll out, Qualcomm is positioning these AI-enabled chips to serve as the bridge between edge devices and the cloud. Imagine a world where your phone not only uses AI for its own functions but also acts as a local AI hub for other nearby devices. By leveraging their dominant position in mobile chipsets, Qualcomm is quietly laying the groundwork for a distributed edge AI network that could be as widespread as smartphones themselves.

NVIDIA (NVDA)

NVIDIA’s (NVDA) edge AI strategy is about bringing their graphics processing unit (GPU) expertise to the edge. NVIDIA is already known as an AI hardware leader, but its real strength is that it sells an entire ecosystem.

Sure, NVIDIA’s Jetson modules are like mini supercomputers for edge devices, and the company also offers robust platforms like EGX and IGX Orin, designed to bring data center-grade AI capabilities to the edge. The real magic, however, is in their CUDA and TensorRT software. CUDA lets developers program NVIDIA GPUs directly, while TensorRT optimizes trained AI models to run efficiently on NVIDIA hardware. This creates a powerful network effect: the more developers use NVIDIA’s tools, the more AI applications are optimized for NVIDIA hardware, which in turn attracts more developers. This hardware-software synergy is NVIDIA’s unique advantage in edge AI.