The Inference Pivot.

The first phase of the AI boom was defined by training on the back of massive, centralized capex.

Hyperscalers—Microsoft, Google, Amazon—spent billions hoarding H100 GPUs to build bigger “brains.” This created the Nvidia trade and the data center buildout, a historic run to supply brute force compute.

We’re now entering the second, more durable phase: inference at the edge.

This is where AI models actually work. An autonomous vehicle deciding to brake. A factory robot detecting a micro-fissure in a pipeline. A pacemaker adjusting its rhythm to save a life.

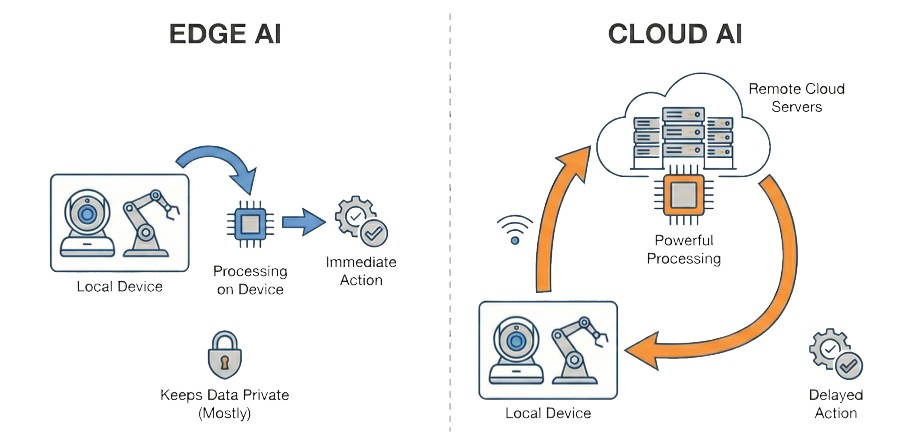

In these markets, cloud AI collides with the laws of physics.

Transmitting terabytes of raw sensor data from a smart manufacturing plant to a data center in Virginia, processing it, and sending a command back is simply too slow.

In high-stakes industrial and medical environments, a 200-millisecond latency loop can be a catastrophic failure point. Bandwidth costs to move that data are also prohibitive, and companies cannot afford to pay a “data tax” on every operational decision.
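To put the 200-millisecond figure in physical terms, here is a rough back-of-the-envelope sketch. The 70 mph speed and the 10 ms on-device loop are illustrative assumptions, not measurements from any specific system.

```python
MPH_TO_M_PER_S = 0.44704  # exact miles-per-hour to metres-per-second factor


def distance_during_latency(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance in metres covered while waiting latency_ms for a decision."""
    return speed_m_per_s * (latency_ms / 1000.0)


# Assumed scenario: a vehicle at 70 mph (hypothetical speed).
highway_speed = 70 * MPH_TO_M_PER_S

# 200 ms cloud round trip (figure from the text) vs. an assumed 10 ms local loop.
cloud_distance = distance_during_latency(highway_speed, 200)
local_distance = distance_during_latency(highway_speed, 10)

print(f"Cloud loop: {cloud_distance:.1f} m travelled before the command arrives")
print(f"Local loop: {local_distance:.1f} m travelled before the command arrives")
```

Under these assumptions the vehicle covers several metres before a cloud decision comes back, versus well under a metre for the local loop.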

The thesis for edge AI is that the market will bifurcate. The heavy lifting of training stays in the cloud, but the high-volume, continuous work of inference moves to the endpoint. Global AI data centers number in the thousands, while edge devices number in the billions.

In this world, value accrues to edge AI stocks with specialized silicon: NPUs in phones and PCs, AI accelerators in autos, smarter MCUs and sensors in industry. A modest uplift per device, multiplied by immense unit volumes, can rival or surpass the data center opportunity over time.
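The volume argument is simple multiplication, and a small sketch makes the sensitivity visible. Every input below is a hypothetical placeholder chosen only to show the shape of the math. The unit counts are not forecasts, and the per-unit values are round numbers rather than market data.

```python
def annual_opportunity(units_per_year: float, value_per_unit_usd: float) -> float:
    """Total annual dollar opportunity: units shipped times value per unit."""
    return units_per_year * value_per_unit_usd


# Hypothetical inputs (assumptions, not market data).
DC_UNITS = 3_000_000            # data center accelerators per year
DC_VALUE_USD = 30_000           # value per accelerator
EDGE_UNITS = 20_000_000_000     # edge devices per year
EDGE_UPLIFT_USD = 5             # incremental AI silicon value per device

datacenter = annual_opportunity(DC_UNITS, DC_VALUE_USD)
edge = annual_opportunity(EDGE_UNITS, EDGE_UPLIFT_USD)

# Per-device uplift at which the edge total matches the data center total.
break_even_uplift = datacenter / EDGE_UNITS

print(f"Data center: ${datacenter / 1e9:.0f}B, Edge: ${edge / 1e9:.0f}B")
print(f"Break-even uplift per edge device: ${break_even_uplift:.2f}")
```

The point is not the specific totals but the break-even line: with unit volumes in the billions, a few dollars per device is enough to put the two markets in the same range.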

Edge AI Architecture & IP

This segment of edge AI stocks sells the “blueprints.” Unlike Nvidia, which sells finished chips, these companies sell the logic, instruction sets, and interconnects that allow others (Apple, Qualcomm, Samsung, Tesla) to build edge silicon. The thesis here is structural leverage: You don’t need to pick the winning device maker if you own the architecture they all must use to run inference efficiently.

Arm Holdings (NASDAQ: ARM)

Arm is the de facto standard for power-constrained computing. While x86 (Intel/AMD) dominates the grid-connected data center, Arm’s RISC architecture monopolizes the battery-powered edge. The shift to inference forces a transition from Armv8 to the newer Armv9 architecture, which includes SVE2 (Scalable Vector Extension 2), designed to accelerate ML workloads on-device without torching battery life.

Arm is also moving up the value chain with “Compute Subsystems” (CSS), enabling them to license pre-validated blocks of silicon rather than just raw IP cores, effectively capturing more royalty value per device.

The 2026 Catalyst: “CSS” Unit Royalty. Watch for the rollout of the MediaTek Dimensity 9400 and Google Tensor G5 (likely in the Pixel 10) in 2026. These flagship mobile chips are expected to lean heavily on Arm’s latest Cortex-X cores and Compute Subsystems to handle on-device GenAI. Arm captures higher royalty rates per chip—moving from ~2% of chip value to closer to ~5%—as they sell complete validated subsystems rather than isolated cores.

Ceva (NASDAQ: CEVA)

If an edge device cannot connect (Bluetooth, Wi-Fi, UWB, 5G) or sense (audio/motion), it cannot infer. Ceva licenses the DSPs (Digital Signal Processors) and NPUs (Neural Processing Units) that handle this noisy, unstructured real-world data before it even hits the main processor.

As edge devices evolve from “smart” to “autonomous,” they require always-on sensing (voice triggers, spatial awareness) that must run on microwatts of power. Ceva’s NeuPro-M NPU IP allows OEMs to embed tiny, low-power AI engines directly into sensors and microcontrollers, bypassing the main CPU entirely.

The 2026 Catalyst: Microchip Technology Ramp. In late 2025, Ceva announced a strategic partnership to embed their NeuPro NPUs into Microchip’s massive portfolio of microcontrollers (MCUs). 2026 is the execution year where these AI-enabled MCUs begin sampling to industrial and automotive clients. This moves Ceva from consumer gadgets (earbuds/watches) into the high-volume, long-lifecycle industrial market.

Arteris (NASDAQ: AIP)

Arteris solves the “data movement” bottleneck. As edge SoCs (Systems-on-Chip) become more complex, integrating CPUs, GPUs, and NPUs on a single die, the physical wire connections between them become a traffic jam. Arteris sells Network-on-Chip (NoC) IP, which is essentially the automated traffic control system for silicon. Their IP organizes how data packets travel across a chip, ensuring that the NPU gets the data it needs from memory without stalling.

The 2026 Catalyst: “Zonal” Architecture. Car manufacturers are currently redesigning vehicle electronics from hundreds of isolated ECUs to a few centralized, super-powerful SoCs (Zonal Controllers). These chips require massive data throughput to process inputs from LiDAR, radar, and cameras simultaneously. Arteris is the dominant interconnect provider for these automotive SoCs (used by Mobileye, Bosch, and others). 2026 will see the start of production (SOP) for several Level 2+/Level 3 autonomous driving fleets that utilize these next-gen architectures.

Heavy Edge Inference

This segment of edge AI stocks covers “high-consequence” applications. Unlike a generative AI feature on a smartphone, which can fail harmlessly, these chips control 4,000-pound vehicles moving at highway speeds or industrial robots working alongside humans.

The thesis here is determinism and safety. In this domain, “good enough” accuracy is unacceptable. These processors must deliver massive compute performance (tens to hundreds of TOPS) to process radar, LiDAR, and vision data in real-time, all while adhering to strict safety standards (ASIL-D) and staying within a passive cooling power budget.

NXP Semiconductors (NASDAQ: NXPI)

NXP is the incumbent king of the automotive microcontroller, but they are re-architecting the car for the software-defined era. The modern vehicle is transitioning from a messy web of 100+ distinct Electronic Control Units (ECUs) to a streamlined “Zonal Architecture” controlled by a few powerful processors.

NXP’s S32 platform is the cornerstone of this shift. It unifies the car’s compute, allowing a single chip family to handle everything from door locks to advanced driver assistance systems (ADAS). As vehicles become rolling computers, NXP silicon remains the standard for safety-critical execution.

The 2026 Catalyst: CoreRide Platform. NXP’s S32N and S32K5 processors—specifically designed for centralized zonal control—hit critical volume production in 2026. This coincides with major OEM launches of “Software-Defined Vehicles” (SDVs) that require this specific architecture to support Over-the-Air (OTA) updates and feature subscriptions. NXP shifts from selling $5 commodity chips to high-margin, integrated compute platforms worth significantly more per vehicle.

Ambarella (NASDAQ: AMBA)

Ambarella has pivoted from a “GoPro chipmaker” to a serious contender in high-performance autonomous driving silicon. Their edge is their proprietary CVflow architecture, which processes neural networks far more efficiently per watt than general-purpose GPUs. This efficiency is paramount for electric vehicles, where every watt consumed by the computer is a mile lost in range.

Ambarella creates the domain controllers that allow mainstream vehicles (not just robotaxis) to “see” and “understand” their environment using cameras and radar, offering Level 2+ autonomy (hands-free highway driving) at a price point mass-market OEMs can actually afford.

The 2026 Catalyst: CV3-AD Production. After years of development and sampling, Ambarella’s flagship CV3-AD domain controller family enters mass production in 2026. This chip is a beast, replacing multiple competitors’ chips with a single SoC that handles perception, fusion, and path planning. Watch for the rollout of production vehicles from Continental and other Tier-1 partners who have integrated the CV3.

Mobileye (NASDAQ: MBLY)

Mobileye is a pure-play bet on “solved” autonomy for vehicles. While others sell empty hardware canvases for OEMs to paint their own software on, Mobileye sells a closed, validated “black box” solution. Their moat is data: they have mapped billions of miles of global roads with unmatched precision.

They take a two-pronged approach to the autonomy market: “SuperVision” for premium consumer ADAS (eyes-on, hands-off) and “Chauffeur” for true autonomy (eyes-off). Mobileye is betting that legacy automakers will struggle to build their own software stacks, then capitulate and buy Mobileye’s proven full-stack solution to remain competitive.

The 2026 Catalyst: “Chauffeur” Eyes-Off. 2026 is the scheduled arrival for Mobileye Chauffeur, the company’s “eyes-off” consumer autonomous system. Unlike current “hands-free” systems (like Super Cruise) that still require driver attention, Chauffeur allows the driver to legally check out (read a book, watch a movie) on highways. This system is expected to launch with key partners (like Polestar or Porsche) in 2026. Successful deployment here validates the “eyes-off” market and separates Mobileye from every other driver assist provider.

TinyML & Low-Power Logic

This segment of edge AI stocks is about “ambient intelligence.” These are the chips that will live in lightbulbs, thermostats, power tools, and appliances. The thesis here is volume over price. While an Nvidia H100 sells for $30,000, these chips sell for $1 to $10.

But where there are thousands of data centers, there will be billions of these endpoints. This segment does not chase raw compute; it optimizes for “good enough” inference on a coin-cell battery.

STMicroelectronics (NYSE: STM)

STMicroelectronics is a play on the democratization of AI in consumer devices. Their STM32 family is already the global standard for microcontrollers, used by nearly every embedded engineer on the planet. Their strategy is to make AI invisible by integrating it directly into this existing standard.

Instead of forcing engineers to learn complex new hardware, they are embedding proprietary “Neural-ART” accelerators directly into their STM32 chips. This allows a washing machine to “hear” a bearing failure or a drill to “feel” a material change without adding a separate, expensive AI processor. They are effectively upgrading the world’s appliance infrastructure to be AI-capable by default.

The 2026 Catalyst: STM32N6 Ramp. Watch for the volume availability of the STM32N6 in early 2026. This is the company’s first microcontroller with an integrated Neural Processing Unit (NPU) right on the die. This release marks a psychological shift for embedded engineers: AI becomes a standard peripheral, just like Bluetooth or USB, rather than a specialist add-on.

Lattice Semiconductor (NASDAQ: LSCC)

Lattice manufactures Field Programmable Gate Arrays (FPGAs)—chips that can be rewired via software after they leave the factory. In an AI world where algorithms change weekly, FPGAs offer a critical advantage: adaptability.

While a fixed chip might be obsolete in two years, a Lattice chip can be updated to run a newer inference model over the air. They dominate the small, low-power end of this market, often serving as the “first responder” in a system—waking up the main processor only when their low-power AI detects a face or a keyword, saving system power.
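The power saving from this “first responder” pattern can be sketched with a simple duty-cycle calculation. All power figures and the wake fraction below are hypothetical round numbers, not specifications for any Lattice part or host processor.

```python
def gated_average_power_mw(
    detector_mw: float,
    main_active_mw: float,
    main_sleep_mw: float,
    wake_fraction: float,
) -> float:
    """Average system power when an always-on detector gates the main processor.

    wake_fraction is the share of time the main processor is awake (0.0 to 1.0).
    """
    main_avg = wake_fraction * main_active_mw + (1 - wake_fraction) * main_sleep_mw
    return detector_mw + main_avg


# Hypothetical figures (assumptions for illustration only).
DETECTOR_MW = 1.0        # always-on low-power detector
MAIN_ACTIVE_MW = 2000.0  # main processor while awake
MAIN_SLEEP_MW = 5.0      # main processor while asleep
WAKE_FRACTION = 0.02     # main processor is woken 2% of the time

gated = gated_average_power_mw(DETECTOR_MW, MAIN_ACTIVE_MW, MAIN_SLEEP_MW, WAKE_FRACTION)
always_on = MAIN_ACTIVE_MW  # baseline: main processor never sleeps

print(f"Gated: {gated:.1f} mW vs. always-on: {always_on:.1f} mW")
print(f"Reduction factor: {always_on / gated:.1f}x")
```

The result is dominated by the wake fraction: the rarer the events that justify waking the main processor, the closer average power falls toward the detector plus sleep floor.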

The 2026 Catalyst: Avant Platform. Lattice has spent the last two years launching its Avant mid-range platform to expand its total addressable market beyond simple control logic. 2026 is the year these design wins convert to revenue. As industrial customers finalize their next-gen motor control and vision systems, the ramp of Avant allows Lattice to capture $5-$10 sockets instead of their traditional $1-$2 sockets, expanding their share of wallet per device.

Nordic Semiconductor (OSLO: NOD, OTC: NDCVF)

Nordic is the market leader in Bluetooth Low Energy (BLE), the language spoken by almost every battery-powered IoT device. Their pivot is moving from “just connectivity” to “connectivity + compute.” By beefing up the processing power in their chips, they allow devices to process data locally before broadcasting it. This creates a “smart radio” that doesn’t just transmit raw data but transmits insights, saving battery life that would otherwise be wasted sending useless noise.

The 2026 Catalyst: nRF54 Series. Watch the production of the nRF54 series, specifically the nRF54LM20A. This chip is a quantum leap for the company, featuring dual processors capable of running heavy ML workloads while maintaining ultra-low power consumption. It’s specifically designed to handle the new “Matter” smart home standard and on-device machine learning, giving Nordic a foothold in smart homes and medical wearables.

Synaptics (NASDAQ: SYNA)

Synaptics is historically known for touchpads and interfaces, but they have been pivoting to become a full-stack AI processor company. Their Astra platform is designed to be the brain of the “Enterprise IoT”—think videoconferencing bars, smart displays, and biometric security systems.

Unlike the tiny microcontrollers above, Synaptics chips run full operating systems (Linux/Android) and handle rich media, making them the go-to choice for devices that need to interact with humans visually and verbally.

The 2026 Catalyst: Astra SL2600. Watch the Q2 2026 General Availability of the Astra SL2600 series. These are “AI-native” processors with integrated NPUs designed specifically for the edge. The release will prove whether Synaptics can successfully compete against heavyweight incumbents like Qualcomm in the high-performance IoT space. Success here validates their multi-year pivot and opens up a high-margin revenue stream in industrial and enterprise automation.

The Edge Feeds the Cloud

Most framings treat Edge AI as a localized efficiency play, a way to cut cloud bills and reduce latency. This misses the larger structural shift.

Edge AI is about execution, yes—but it’s also about curation.

Cloud models have already consumed the entire text-based internet. Their rate of improvement is stalling. To advance, they need “physical reality” data—video, acoustics, and motion. But the physical world generates too much noise to transmit.

Edge silicon is the gatekeeper. It acts as the filter that discards the 99% of mundane data and uploads the 1% of anomalies—the near-miss accident, the micro-fracture in a pipe, the unexpected voice syntax.
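The gatekeeper idea can be sketched as a rolling statistical filter that runs on the device and flags only outliers for upload. This is a minimal illustration, assuming a simple z-score test on a single sensor channel. The class name, window size, and threshold are hypothetical, and real edge silicon would typically run a trained model rather than this rule.

```python
from collections import deque
from statistics import mean, pstdev


class EdgeAnomalyFilter:
    """Keeps a rolling baseline locally and flags only outlier readings."""

    def __init__(self, window: int = 50, threshold: float = 3.0, warmup: int = 10):
        self.history = deque(maxlen=window)  # recent normal readings
        self.threshold = threshold           # z-score cutoff for an anomaly
        self.warmup = warmup                 # readings needed before judging

    def should_upload(self, reading: float) -> bool:
        is_anomaly = False
        if len(self.history) >= self.warmup:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(reading - mu) / sigma > self.threshold:
                is_anomaly = True
        # Anomalies are kept out of the baseline so they do not skew it.
        if not is_anomaly:
            self.history.append(reading)
        return is_anomaly


# Synthetic sensor stream: a steady signal with one injected spike.
readings = [20.0 + 0.1 * (i % 5) for i in range(100)]
readings[60] = 35.0

edge_filter = EdgeAnomalyFilter()
uploaded = [r for r in readings if edge_filter.should_upload(r)]

print(f"Read {len(readings)} samples, uploaded {len(uploaded)}: {uploaded}")
```

In this synthetic stream only the injected spike leaves the device; the other 99 readings are consumed locally to maintain the baseline and are never transmitted.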

In this way, Edge AI does not compete with the Cloud. It feeds it.

This watchlist structures the Edge AI trade by function and risk. Architecture & IP (Arm, Ceva, Arteris) offers high-margin, royalty-based exposure. Heavy Edge (NXP, Ambarella, Mobileye) captures the high-value, safety-critical battleground of automotive and robotics. TinyML & Low-Power Logic (STM, Lattice, Nordic, Synaptics) provides the volume play, embedding intelligence into the billions of mundane devices that comprise the invisible edge.