Most investors only recognize exponential growth in hindsight. Think of Apple’s rise from near-bankruptcy in the late 1990s to a $3 trillion titan today, or Amazon’s journey from a humble online bookstore to the “everything store,” or Nvidia’s metamorphosis from a niche GPU maker into the face of the AI boom. These stories look obvious after the fact. The real challenge—and opportunity—is spotting exponential potential before it’s obvious to everyone else. In this primer, we explore nine fundamental exponential engines: the underlying cause-and-effect forces that drive exponential growth.

These engines aren’t just concepts; they’re real-world patterns that show up across technology and business. From the classic Moore’s Law to cutting-edge phenomena like quantum computing, each engine is a hidden driver of compounding value creation. For each, we’ll break down how it works, look at concrete examples, and draw out key takeaways. The goal is a practical primer on what makes “exponential” businesses tick and how to spot them early. Let’s dive in.

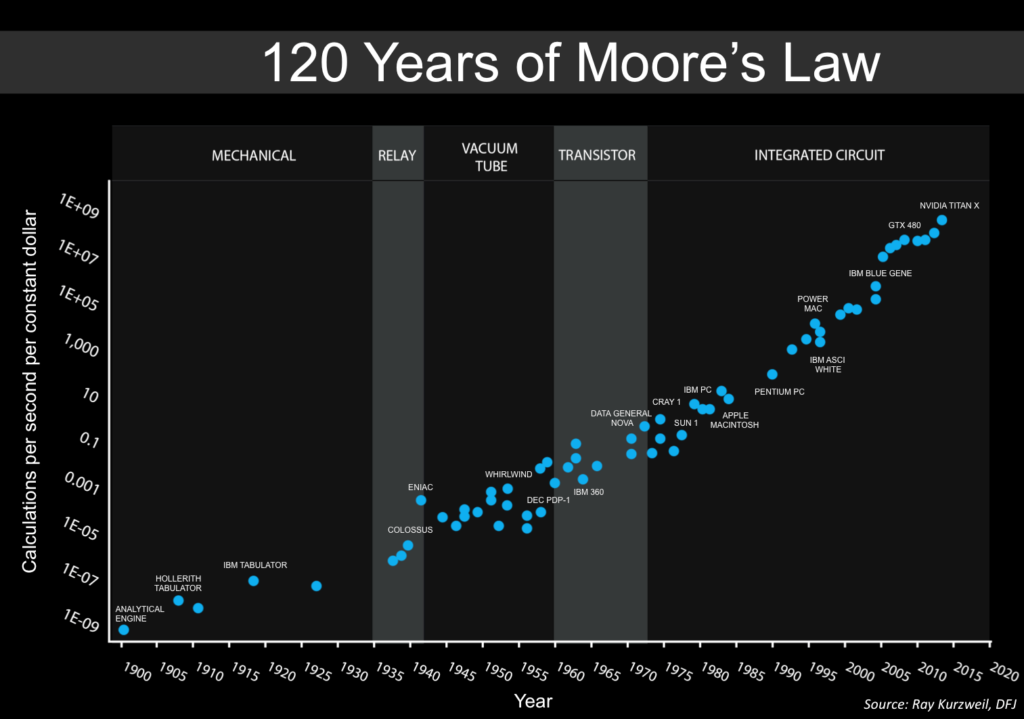

Miniaturization (Moore’s Law)

Modern tech owes much of its exponential progress to one simple idea: making things smaller. In 1965, Gordon Moore, who went on to co-found Intel, observed that the number of transistors engineers could fit on a chip was doubling at a regular clip; he later settled on a pace of roughly every two years. That steady doubling boosted computing power exponentially. This insight, later dubbed Moore’s Law, held true for decades and became the engine of the digital revolution. It’s why your smartphone today packs more computing muscle than a room-sized NASA supercomputer did in 1969.
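To make the doubling concrete, here is a minimal sketch of what “doubling roughly every two years” compounds to. The function name `transistor_multiple` and the chosen time spans are illustrative assumptions; the two-year period is the only input taken from the text.

```python
def transistor_multiple(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth multiple after `years`, assuming one doubling per period."""
    return 2.0 ** (years / doubling_period_years)


# Illustrative time spans (assumed, not from the article).
for years in (2, 10, 20, 40):
    print(f"{years:>2} years -> {transistor_multiple(years):,.0f}x")
```

Ten years of doubling gives 32x; forty years gives about a million-fold. That gap between a decade and four decades is the whole story of compounding.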

Think of filling a jar with marbles versus sand. Transistors are like the “components” of computing. By shrinking them from marble-size to grain-of-sand-size, you can fit exponentially more into the jar without increasing the jar’s volume. Each shrinkage unlocked a wave of new applications: the PC boom of the 1980s, the mobile and cloud explosion of the 2000s, and today’s AI revolution all rode on the back of Moore’s Law. A company like Nvidia, for example, benefited heavily from this engine; its GPUs (originally meant to render graphics) became dramatically more powerful over time and found new uses in deep learning AI.

To be sure, you can’t shrink things forever, and transistors are approaching atomic scales. But every time it has looked like Moore’s Law might stall, engineers have found a workaround. They invent new chip architectures and even stack chips in 3D layers to keep the exponential improvements coming. This means exponential growth in computing power is not over. History shows that sustained miniaturization mints winners (and millionaires) repeatedly. The lesson: don’t bet against engineers’ ability to do more with less.

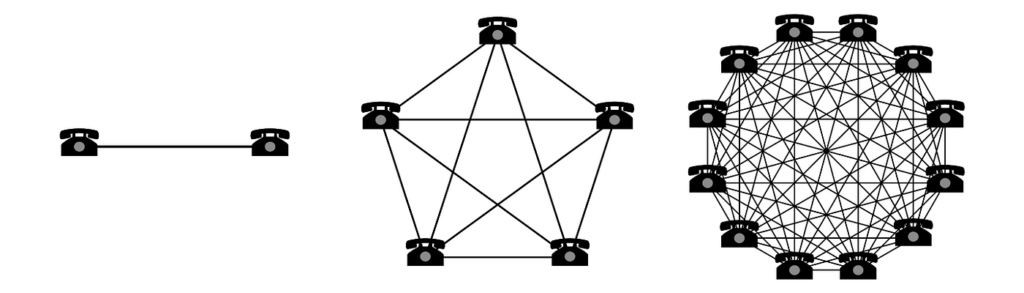

Network Effects

A network effect is perhaps the most famous exponential engine in business. The premise is deceptively simple: the value of a product or service compounds as more people use it. In other words, more users → more value → even more users. This creates a self-reinforcing growth cycle that can turn a scrappy startup into a natural monopoly. If you were the only person with a telephone, it’d be useless. But once everyone has one, the telephone network is invaluable. The same goes for social media, messaging apps, marketplaces, and many other platforms. They become indispensable when all your friends or customers or suppliers are on them.

Many iconic companies owe their dominance to network effects. Facebook became the default social network largely because all your friends joined. Amazon’s marketplace gets stronger as it attracts more buyers and sellers, creating unmatched selection and price advantages. Uber and Lyft grew fast by building both sides of the ride-hailing network (drivers and riders) in more and more cities. Once a network reaches critical mass, it’s brutally hard for a newcomer to dislodge it, because the incumbent’s product is inherently more useful (simply by having all the users). This is why network effects tend to lead to winner-take-all dynamics.

For a vivid example, consider WhatsApp. In 2014, Facebook paid an “eye-popping” $19 billion to buy the messaging app, a price many called crazy at the time. Fast forward a few years: WhatsApp surpassed 1.5 billion users (from 450 million in 2014) and became core to Facebook’s dominance in global messaging. The $19B price tag now looks like a steal because of WhatsApp’s massive network effect. This “user begets user” flywheel is the magic. When each existing user brings in more than one new user (a “viral coefficient” above 1), usage can snowball geometrically.
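The viral coefficient threshold is easy to see in a toy model. The sketch below assumes each cohort of new users recruits `viral_coefficient` new users per cycle, which is a deliberate simplification; the seed size and cycle count are made-up numbers, not WhatsApp data.

```python
def total_users(seed_users: int, viral_coefficient: float, cycles: int) -> float:
    """Cumulative users after `cycles` rounds of referrals.

    Toy model (an assumption, not a forecast): every new user recruits
    `viral_coefficient` further users in the following cycle.
    """
    total = float(seed_users)
    new_users = float(seed_users)
    for _ in range(cycles):
        new_users *= viral_coefficient  # size of the next cohort
        total += new_users
    return total


# Hypothetical scenario: 1,000 seed users, 10 referral cycles.
for k in (0.5, 1.0, 1.5):
    print(f"k = {k}: {total_users(1_000, k, 10):,.0f} users")
```

Below 1, growth fizzles toward a ceiling (here, under 2,000 users). At exactly 1 it is linear. Above 1, each cohort is larger than the last and the total runs away.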

Be cautious of fads (user growth that’s hype-driven but not self-sustaining). However, businesses with true network effects deserve special attention. Once a company has an entrenched network, it gains pricing power and a strategic moat. For example, sellers can hardly leave Amazon due to the sheer customer reach it provides, and users won’t leave WhatsApp because all their contacts are there. This yields durable cash flows for the leader.

Feedback Loops (Learning by Use)

Not all products wear out with use; some actually improve the more you use them. Data feedback loops (or learning systems) are an exponential engine where each user interaction makes the product smarter, creating a self-reinforcing improvement cycle. Think of Tesla’s fleet of vehicles on the road. Tesla gathers billions of miles of driving data from customer cars, which constantly train its self-driving AI. Even when drivers aren’t using Autopilot, their actions are feeding Tesla’s learning algorithms. So when one Tesla learns how to handle a tricky intersection, that knowledge can be uploaded and shared with every Tesla on the road. In effect, the product is appreciating in capability with each mile driven – a complete reversal of traditional product dynamics.

We see similar feedback loops in many AI-powered services. Google’s search engine refines itself by analyzing which results users click. Netflix uses viewing data from its 238 million subscribers to hone its recommendation engine, one reason 80% of Netflix viewing is driven by personalized suggestions. Spotify learns your music taste with every song you skip or heart, making your Daily Mixes and Discover Weekly playlists better over time. These services all leverage a positive feedback loop: usage → data → learning → better product → more usage.

The presence of strong feedback loops is a green flag that a company might enjoy accelerating competitive advantage. Key things to look for: Does the product leverage AI or algorithms that learn from user behavior? Do those improvements demonstrably drive higher engagement or retention? Is there a data asset accumulating that is hard for others to replicate? Companies that own unique, rich datasets (e.g. Tesla’s driving data, Google’s search queries, Amazon’s purchase patterns) have the fuel for these exponential learning cycles.

Importantly, check that the loop is actually working. Data by itself isn’t valuable unless the company can translate it into a better service (this often requires AI talent and infrastructure). But when the loop is firing on all cylinders, it creates a moat that gets deeper with every user. This often shows up in metrics like declining cost per outcome (for example, cost per ML inference dropping with more training data) or improving user satisfaction over time.

Learning Curves (Wright’s Law)

In 1936, an aircraft engineer named Theodore Wright noticed a curious pattern: every time total airplane production doubled, the labor cost per plane dropped by a consistent percentage. This was the first formulation of Wright’s Law, also known as the learning curve effect. It turns out this principle – costs falling predictably with cumulative experience – applies to many industries, from manufacturing to services. As companies (and industries) scale up production, they find ways to do things more efficiently: workers get better with practice, processes improve, supply chains optimize, and so on. The result is an exponential reduction in cost per unit as volume grows.

Consider the drop in cost for renewable energy technologies. Solar photovoltaic (PV) modules have seen about a 20% cost reduction for each doubling of cumulative output. Over the past four decades, this “learning rate” of 20% has compounded to a 99% drop in solar panel prices. Traditional utilities underestimated how fast solar and wind would get cheap, and were disrupted. Similarly, lithium-ion battery cells have become 97% cheaper since 1991, following a ~19% learning rate. A battery that cost $7,500 per kWh in the early ’90s cost only about $181/kWh by 2018. This drastic decline has been a game-changer for electric vehicles and grid storage, enabling mass-market EVs at affordable prices.
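As a rough check on these figures, Wright’s Law can be written as cost = initial cost × (1 − learning rate)^doublings. The sketch below uses only the learning rates and prices quoted above; the function names are illustrative, and the implied number of doublings is a back-of-the-envelope inference rather than a reported statistic.

```python
import math


def cost_after_doublings(initial_cost: float, learning_rate: float, doublings: float) -> float:
    """Unit cost after cumulative output has doubled `doublings` times."""
    return initial_cost * (1.0 - learning_rate) ** doublings


def doublings_needed(cost_ratio: float, learning_rate: float) -> float:
    """Doublings of cumulative output implied by a final/initial cost ratio."""
    return math.log(cost_ratio) / math.log(1.0 - learning_rate)


# Solar: a 99% price drop at a 20% learning rate.
print(f"solar:   {doublings_needed(0.01, 0.20):.1f} doublings")

# Batteries: $7,500/kWh down to $181/kWh at a ~19% learning rate.
print(f"battery: {doublings_needed(181 / 7500, 0.19):.1f} doublings")
```

Both cases work out to somewhere around 18 to 21 doublings of cumulative output. The cost curve only looks dramatic because the volume growth behind it was itself enormous.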

What’s crucial is that these cost improvements weren’t one-off. They were continuous and compounded exponentially as the industries scaled. Companies that ride steep learning curves can outpace competitors in cost efficiency. That opens up new markets, drives adoption, and can even create a separate exponential growth feedback loop (lower costs → more demand → higher volume → even lower costs). Some businesses also exhibit pseudo learning curves in the form of economies of scale – high fixed R&D cost but near-zero marginal cost, so average cost per user plummets as user count grows. In either case, it’s about making each unit of output cheaper as you scale.
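The economies-of-scale variant is simple arithmetic: average cost per user is the fixed cost spread over the user base, plus the marginal cost. The dollar figures below are hypothetical and chosen only to show the shape of the curve.

```python
def average_cost_per_user(fixed_cost: float, marginal_cost: float, users: int) -> float:
    """Fixed cost spread across all users, plus the per-user marginal cost."""
    return fixed_cost / users + marginal_cost


# Hypothetical business: $10M of fixed R&D, $0.10 marginal cost per user.
for users in (1_000, 100_000, 10_000_000):
    cost = average_cost_per_user(10_000_000, 0.10, users)
    print(f"{users:>10,} users -> ${cost:,.2f} per user")
```

Under these assumed numbers, the average cost falls from roughly $10,000 per user to just over a dollar, even though nothing about the product itself got cheaper to build.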

Dematerialization

Sometimes the easiest way to grow exponentially is to remove material constraints entirely. Dematerialization is the shift from physical products to digital or software-based products. When you deliver value as bits rather than atoms, scaling becomes a whole different game. A classic example is the evolution from CDs and DVDs to streaming media. To grow a music service to millions of users, a CD retailer would have needed to press millions of discs, build warehouses, and set up distribution to stores or mail-order – a linear and asset-heavy process. But when music is delivered as MP3 files or streams, adding the next million users is practically free.

Consider Microsoft Teams during the 2020 pandemic. Virtually overnight, hundreds of millions of people switched to remote work and needed digital collaboration tools. Microsoft Teams was able to add 95 million new users in 2020, without building 95 million of anything. There was no way a physical-product company could scale that fast – but for a cloud service, it was (relatively) straightforward, since each new user is just another account on Microsoft’s servers. This is dematerialization in action: growth isn’t constrained by physical production.

We see this pattern across industries. Books went from paper to e-books/audiobooks; now a single Amazon Kindle device can hold a library. Banking and payments have increasingly dematerialized: apps and digital wallets now handle volumes that would’ve required thousands of brick-and-mortar branches in the past. Even money itself has bits-and-bytes alternatives (cryptocurrencies, digital payments) that move at the speed of the internet rather than the speed of armored trucks. Every time a product or service turns digital, it gains the potential for software-like scaling – exponential reach without commensurate costs.

Key indicators of dematerialization-driven exponentials: high gross margins (because costs don’t rise much with volume), rapid user growth without corresponding inventory growth, and industries where digital substitution is still in early innings. If, say, only 10% of an industry’s transactions are digital and the rest are still physical/paper-based, you can envision how a digital player could 10x by capturing that remaining 90%. The challenge is often timing and adoption – convincing customers to jump from a familiar physical solution to a digital one. But when the switch happens (often catalyzed by external events or generational shifts), the growth can be explosive.

Crowdsourcing (Platform Scale)

How do you grow a labor-intensive business exponentially without a corresponding growth in headcount? One answer: crowdsourcing. This engine involves opening up your platform to contributions from a broad community (users, partners, third-party developers), rather than doing everything in-house. When done right, it enables output and innovation at scale with minimal internal effort – essentially leveraging the crowd as a force multiplier.

The poster child for crowdsourcing is Wikipedia. In about two decades, Wikipedia has become the world’s largest encyclopedia by far, with over 6 million articles in English (and tens of millions across other languages) – all written by unpaid volunteers spread around the globe. A traditional approach (like Encyclopaedia Britannica’s model) would have required hiring legions of expert writers and editors, which simply doesn’t scale to millions of topics. Wikipedia’s genius was to create a platform where anyone could contribute knowledge, with just enough oversight to maintain quality.

Another example is Roblox, the gaming platform. Roblox provides the tools for users (e.g., teenage hobbyist developers) to create their own games, which other users can play. Today, Roblox has about 100 million daily active users and hosts over 40 million user-created games on its platform. This is an astonishing output of content – far more games than even the biggest traditional game studio could ever produce. Roblox Corporation doesn’t build those games; it simply runs the platform, offers development tools, and manages distribution.

Other examples abound. YouTube crowdsources video content from millions of creators, rather than its own studio. Airbnb is powered by millions of hosts (homeowners) signing up to offer stays, rather than building its own hotels. Shopify enables over two million independent merchants to run online stores on its platform, without holding its own inventory. In each case, the business provides infrastructure, and external contributors do the heavy lifting of content creation, asset provision, or innovation. The result: exponential growth driven by external input.

Platforms with crowdsourcing or user-generated content (UGC) can be extremely attractive – if they manage to reach critical mass. Early on, the “chicken-and-egg” problem is the biggest hurdle (you need contributors to attract users, but also need users to motivate contributors). But once the flywheel spins, these companies can scale rapidly with comparatively lean cost structures. Their growth is limited more by community size and engagement than by traditional operational limits.

Self-Replication (Biological Scale)

Nature has the original patent on exponential growth. Just consider how a single cell becomes a trillion-cell organism, or how one bacterium becomes billions in a petri dish. The engine at play is self-replication: systems that can make copies of themselves (or of their functional output) can scale at an exponential rate. In recent years, scientists and entrepreneurs have been harnessing this principle via synthetic biology and related fields, effectively turning biology into a manufacturing technology. The idea is to program living cells (microbes, yeast, etc.) to produce useful products and then let them multiply. Instead of building more factories to increase production, you simply grow more cells.

Imagine you need to double the output of a traditional factory making, say, plastic or cars. You’d likely need to build another factory – a huge capital project. But if you engineer a strain of yeast to produce a valuable chemical (like a medicine, enzyme, or food ingredient), scaling up production can be as simple as preparing a larger fermentation tank with more food, and letting the yeast cells replicate. The “factory” (each cell) copies itself millions of times over, at virtually no cost except raw feedstock. In other words, microscopic workers that reproduce do the scaling for you.

This is already happening: companies are brewing insulin, cannabinoids, fragrances, and even lab-grown meat using microbial or cell cultures. Take the example of Ginkgo Bioworks, a synthetic biology company. Ginkgo’s business is engineering microbes to produce things like specialty chemicals and proteins. After designing a microbe with the desired genetic instructions, they can scale from a tiny lab sample to a 10,000-liter bioreactor of that organism fairly quickly, since the organism clones itself through cell division. The costs don’t scale linearly with output, because biology is doing the heavy lifting.

The rise of synthetic biology and self-replicating manufacturing is an exciting frontier. If a company’s production method is self-replicating, whether it’s cells or even self-replicating machines in the future, it can achieve scale at unprecedented speed and low cost. Key areas to watch: pharmaceuticals (cell therapies that expand, or drugs produced by engineered bacteria), agriculture (lab-grown meat or fermented proteins scaling up), and materials (microbes producing plastics, textiles, etc.). Keep in mind, though, that not all processes scale perfectly; sometimes yields drop off or bioreactors face issues at larger volumes.

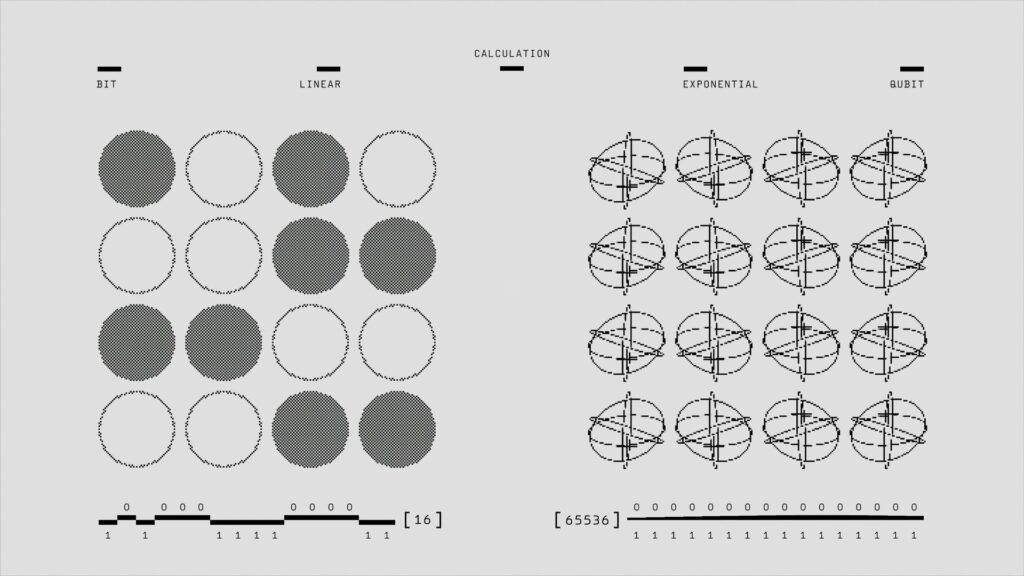

Quantum Parallelism

One of the most radical exponential engines comes from quantum physics, of all places. Quantum parallelism refers to the way quantum computers can explore an exponentially large set of possibilities simultaneously, rather than one-by-one like a classical computer. It’s rooted in two quantum principles: superposition (a qubit can be 0 and 1 at the same time) and entanglement (qubits can be linked so that operations on one affect the others instantly). In practical terms, if you have n qubits, they can collectively represent 2^n states at once. Each additional qubit essentially doubles the potential computing power: 10 qubits = 2^10 = 1,024 states in parallel; 20 qubits = about a million states; 50 qubits = over a quadrillion; and so on.

Consider a classic analogy: the legend of the king and the inventor of chess. The chess inventor asked as a reward one grain of rice on the first square of the chessboard, two on the second, four on the third, and so forth – doubling each square. The king readily agreed, not realizing that by the 64th square, the owed rice would exceed all the grain in the kingdom (actually, all grain on Earth).

A 64-qubit quantum computer, in theory, can juggle 2^64 ≈ 1.8×10^19 states at once. This is why certain problems like factoring large numbers (breaking encryption) or simulating complex molecules suddenly become tractable with enough qubits. It’s like being able to escape a maze by exploring every path simultaneously rather than walking each one sequentially.
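The numbers behind the qubit counts and the chessboard legend are the same powers of two, and they are quick to verify. This sketch only counts states and grains of rice; it does not simulate a quantum computer, and the function names are illustrative.

```python
def state_count(qubits: int) -> int:
    """Number of basis states that `qubits` qubits can represent: 2 to the power n."""
    return 2 ** qubits


def total_rice(squares: int = 64) -> int:
    """Total grains when square 1 holds one grain and each square doubles the last."""
    return sum(2 ** (square - 1) for square in range(1, squares + 1))


for n in (10, 20, 50, 64):
    print(f"{n:>2} qubits -> {state_count(n):,} states")

print(f"rice on a full chessboard: {total_rice():,} grains")
```

The chessboard total comes to one grain short of 2 to the power 64, which is why the 64th square and a 64-qubit register land on essentially the same figure.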

Quantum computing represents a more speculative but potentially seismic exponential force. The technology is still in its early stages (hundreds of physical qubits with noise and errors), but progress is steady. Researchers are now pushing toward devices with 1000+ high-quality qubits. Every incremental advance in qubit count and fidelity is exponential progress, in the sense that the state space grows multiplicatively. Companies like IBM, Google, IonQ, Rigetti and others are in this race. The holy grail is a fault-tolerant quantum computer that can reliably outperform classical supercomputers on useful tasks, a milestone often called “quantum advantage.”

There are also second-order effects: consider the industries that will be transformed once quantum hits a tipping point. For example, encryption-heavy industries (security, finance) would need to overhaul infrastructure, and companies providing “quantum-safe” cryptography could see exponential demand. Pharma companies that leverage quantum chemistry for drug design might outpace those that don’t. Cloud providers offering quantum computing as a service could add new revenue streams quickly as demand kicks in. These are longer-horizon considerations, but important in a 5-to-10-year view.

Combinatorial Innovation

Finally, the most surprising innovations often happen not in a vacuum, but at the intersections. Combinatorial innovation is when new breakthroughs emerge by combining existing technologies in novel ways. As our “toolkit” of technologies expands, the number of possible combinations grows exponentially – much like adding more Lego bricks allows for dramatically more possible builds. This means the pace of innovation accelerates over time, because each new tech doesn’t just add its own value, it also multiplies possibilities by interacting with other tech.
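A quick count shows how fast the combination space grows. The sketch below treats every set of two or more technologies as one possible combination, which is a simplifying assumption (most combinations are not useful products); the function names are illustrative.

```python
from math import comb


def pair_count(technologies: int) -> int:
    """Number of distinct two-technology pairings."""
    return comb(technologies, 2)


def combination_count(technologies: int) -> int:
    """Number of distinct sets containing two or more technologies."""
    return sum(comb(technologies, k) for k in range(2, technologies + 1))


for n in (5, 10, 20):
    print(f"{n:>2} technologies -> {pair_count(n):>3} pairs, "
          f"{combination_count(n):,} combinations")
```

Going from 10 to 20 building blocks roughly quadruples the number of pairs, but multiplies the number of possible combinations by about a thousand.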

A clear example is the development of the smartphone. The smartphone wasn’t just one invention; it was the convergence of several mature technologies: mobile cellular networks, touchscreens, portable computing processors (thanks to miniaturization), GPS satellites for location, digital cameras, and the internet. Each of these existed on its own, but when fused into a single device (the iPhone being the watershed moment), they created an entirely new product category and economy. The smartphone gave birth to countless new markets (apps, mobile services, social media on-the-go) that wouldn’t have been possible without that combination.

Similarly, consider self-driving cars: they are essentially AI + advanced sensors + robotics + modern computing. AI algorithms process data from LIDAR/radar/cameras (sensors) and control the vehicle (robotics), all made feasible by powerful onboard computers. None of these components alone would yield a self-driving car; it’s the combo that does it. As AI gets better and sensors cheaper, the fusion hits a tipping point where autonomous vehicles become viable almost overnight.

Other examples: Blockchain + IoT + cloud computing have been combined to tackle supply chain tracking (e.g., ensuring the provenance of goods). CRISPR gene editing + big data + cloud labs are combining to accelerate biotech discoveries. 3D printing + material science + AI design algorithms are creating new ways to manufacture components that were impossible to machine before. In each case, multiple separate fields are being brought together to unlock an exponential increase in solution space. This is one reason why many observers feel like technology is evolving faster than ever – it’s not just one stream of progress, it’s many streams interweaving and cross-pollinating.

Putting It All Together: Investing in the Exponential Age

All of the engines above share a common theme: they amplify progress as scale increases. In traditional linear businesses, growing output requires roughly commensurate growing input (more employees, more capital, more inventory). Exponential engines break that trade-off. They allow a company to achieve nonlinear outcomes – more from less, or much more from only a bit more.

We are living in an exponential age, where entire markets can appear and mature in just a few years. Think of the smartphone app economy circa 2008-2015, or cryptocurrency in the 2010s, or AI model-based businesses in just the last couple of years. Recognizing the forces that drive those curves can give you an edge in both finding winners and avoiding losers.

A few key takeaways for investors:

- Look for compounding signals. Early on, exponential growth can be hard to distinguish from noise. But some telltale signs include rapidly declining costs (e.g. a cost curve bending down faster each year), snowballing user adoption (user growth rate accelerating, not just growing linearly), or iterative improvement speeding up (product updates or tech breakthroughs happening more frequently). Conversely, if a company’s growth requires linear increases in headcount or capex every year, it may struggle to keep up in exponential markets.

- Bet on mechanisms, not just products. Technologies and fads come and go, but the underlying forces often persist. For example, you might have missed one social media stock, but the network effect principle will generate other opportunities (in maybe entirely new arenas like enterprise SaaS or crypto networks). If you missed the first solar boom, the learning curve insight could still apply to new energy tech (like hydrogen or nuclear fusion) with similar dynamics. By focusing on the engines, we can spot analogies in emerging fields.

- Diversify across exponentials. Each individual exponential trend has uncertainty: maybe quantum takes longer than expected, or a particular network effect doesn’t pan out due to regulation. But as a whole, exponentials are reshaping the economy. Consider an “exponential technologies” basket or strategy. This way, you’re basically long human innovation. Historically, that has been a good place to be.

- Be early (but not too early). The big returns often accrue to those who invested before the exponential inflection point. That said, being too early can test your patience or capital (classic example: many investors bet on AI in the 1980s and ’90s and lost money, only for AI to explode later). The key is to monitor enabling factors. For instance, if you knew in the mid-2010s that data volumes were exploding, GPU prices were dropping (Moore’s Law), and researchers were making breakthroughs (feedback loops in deep learning), you could have built conviction that AI’s time was coming. By 2016-2017, that proved out with the success of new AI applications. Similarly, watch for when quantum computers solve a real problem, or when synthetic biology actually produces a hit product. That could mark the start of the steep climb.

- Be open-minded but diligent. An exponential age demands exponential thinking. Linear thinking might say “this stock is overvalued at 50 P/E because growth will revert to mean.” Exponential thinking asks “could this company actually 10x its market in the next 5 years due to network effects or cost declines, justifying an even higher valuation?” It’s not about buying hype; it’s about understanding that traditional metrics may not apply during certain high-growth phases.
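One way to act on the “compounding signals” takeaway above is to look at period-over-period growth rates rather than absolute gains. The sketch below is a minimal illustration on made-up user counts; the function names and the simple “rate never falls” test are assumptions, not an established screening method.

```python
def growth_rates(series):
    """Period-over-period growth rates for a list of positive values."""
    return [later / earlier - 1.0 for earlier, later in zip(series, series[1:])]


def is_compounding(series, tolerance=1e-9):
    """True if the growth rate never falls from one period to the next."""
    rates = growth_rates(series)
    return all(b >= a - tolerance for a, b in zip(rates, rates[1:]))


# Hypothetical user counts (made up for illustration).
linear_users = [100, 200, 300, 400, 500]            # +100 users per period
compounding_users = [100, 150, 225, 337.5, 506.25]  # +50% per period

print(growth_rates(linear_users), is_compounding(linear_users))
print(growth_rates(compounding_users), is_compounding(compounding_users))
```

The linear series adds the same 100 users each period, so its growth rate decays from 100% toward 25%. The compounding series holds a steady 50%, which is the pattern worth a closer look. Real data is noisy, so any such check would need smoothing and judgment.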

Finally, a word of caution: exponential growth does taper eventually. Each engine has limits: network effects can saturate or face backlash, learning curves can plateau, quantum computing can face engineering ceilings, etc. So we shouldn’t expect trees to grow to the sky; instead, we can aim to ride them while they’re in the high-growth phase, while being ready to rotate when the story changes.
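The tapering is often pictured as an S-curve. The sketch below uses a standard logistic function as one possible model; the ceiling, growth rate, and midpoint are arbitrary illustrative values, not estimates for any real market.

```python
import math


def logistic(t: float, ceiling: float, growth_rate: float, midpoint: float) -> float:
    """Logistic S-curve: near-exponential early on, flattening toward `ceiling`."""
    return ceiling / (1.0 + math.exp(-growth_rate * (t - midpoint)))


# Hypothetical market: saturates at 100 (say, million users), inflects at year 10.
previous = None
for year in range(0, 21, 2):
    value = logistic(year, ceiling=100.0, growth_rate=0.8, midpoint=10.0)
    change = "" if previous is None else f"  ({value / previous:.2f}x vs. two years earlier)"
    print(f"year {year:>2}: {value:7.2f}{change}")
    previous = value
```

In the early years the multiple between readings is nearly constant, which is indistinguishable from pure exponential growth. The multiple then shrinks toward 1.0 as the curve approaches its ceiling, which is the signal that the high-growth phase is ending.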